12 Best Large Language Models (LLMs) in 2023

If you’re discussing technology in 2023, you simply can’t ignore trending topics like generative AI and the large language models (LLMs) that power AI chatbots. Since OpenAI released ChatGPT, the race to build the best LLM has intensified many times over. Big companies, small startups, and the open-source community are all working to develop the most advanced large language models. Hundreds of LLMs have been released so far, but which are the most capable? To find out, follow our list of the best large language models (proprietary and open-source) in 2023.

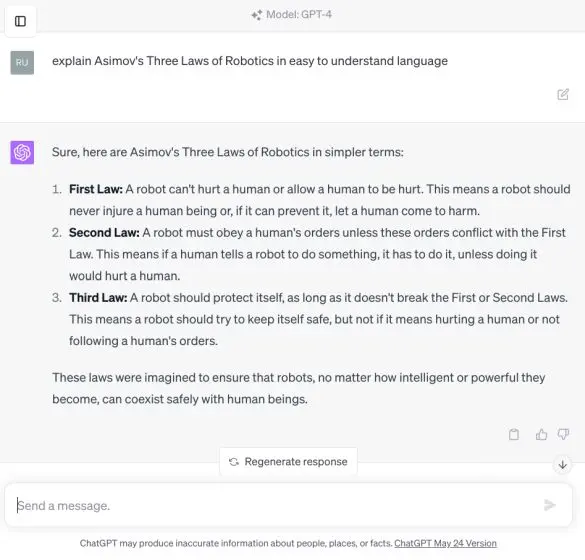

1. GPT-4

OpenAI’s GPT-4 is the best AI large language model (LLM) available in 2023. Released in March 2023, GPT-4 has showcased tremendous capabilities: complex reasoning, advanced coding ability, proficiency in multiple academic exams, human-level performance on many professional and academic benchmarks, and much more.

In fact, it’s a multimodal model that can accept both text and images as input. Although the multimodal ability has not been added to ChatGPT yet, some users have gotten access via Bing Chat, which is powered by GPT-4.

Apart from that, GPT-4 is one of the very few LLMs that has made real progress on hallucination, improving factuality by a wide margin. In OpenAI’s factual evaluations across several categories, GPT-4 scores close to 80%, a clear improvement over GPT-3.5. OpenAI has also gone to great lengths to align GPT-4 more closely with human values, using Reinforcement Learning from Human Feedback (RLHF) and adversarial testing by domain experts.

GPT-4 reportedly has more than 1 trillion parameters, and it supports a maximum context length of 32,768 tokens. OpenAI has shared little about GPT-4’s internal architecture, but George Hotz of The Tiny Corp recently claimed that GPT-4 is a mixture model made up of 8 distinct models with 220 billion parameters each. In other words, it is not one big dense model, as was previously assumed.
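Taking the reported figures at face value (they are Hotz’s unconfirmed claims, not numbers published by OpenAI), the arithmetic behind the “1+ trillion” total is simple. A minimal Python sketch, assuming equally sized experts and ignoring any shared or routing parameters:

```python
# Reported (unconfirmed) GPT-4 configuration: 8 expert models of 220B parameters each.
NUM_EXPERTS = 8
PARAMS_PER_EXPERT = 220e9


def total_parameters(num_experts: int, params_per_expert: float) -> float:
    """Total parameter count of a mixture of equally sized experts.

    Assumption: no shared layers or router weights are counted separately.
    """
    return num_experts * params_per_expert


total = total_parameters(NUM_EXPERTS, PARAMS_PER_EXPERT)
print(f"{total / 1e12:.2f} trillion parameters")  # 1.76 trillion parameters
```

In mixture designs, typically only some experts process each token, so the compute per token can be well below what the total parameter count suggests; how GPT-4 routes tokens, if the claim is accurate, has not been disclosed.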

Finally, you can use ChatGPT plugins and browse the web with Bing using GPT-4. Its main drawbacks are that it is slow to respond and has much higher inference time, which pushes many developers toward the older GPT-3.5 model. Overall, OpenAI’s GPT-4 is by far the best LLM you can use in 2023, and I strongly recommend subscribing to ChatGPT Plus if you intend to use it for serious work. It costs $20 per month, but if you don’t want to pay, you can use GPT-4 for free through third-party portals.

2. GPT-3.5

After GPT-4, OpenAI takes the second spot again with GPT-3.5. It’s a general-purpose LLM similar to GPT-4 but lacks expertise in specific domains. Starting with the pros, it’s an incredibly fast model that generates a complete response within seconds.

Whether you throw creative tasks at it, like writing an essay with ChatGPT, or ask it to come up with a business plan to make money using ChatGPT, GPT-3.5 does a splendid job. Moreover, OpenAI recently released a version of GPT-3.5-turbo with a larger 16K context length. It’s also free to use in ChatGPT, with no hourly or daily restrictions.

That said, its biggest drawback is that GPT-3.5 hallucinates a lot and frequently produces false information, so I wouldn’t suggest using it for serious research work. However, for basic coding questions, translation, understanding science concepts, and creative tasks, GPT-3.5 is a good enough model.

In the HumanEval coding benchmark, GPT-3.5 scored 48.1% while GPT-4 scored 67%, the highest of any general-purpose large language model. Keep the difference in scale in mind: GPT-3.5 reportedly has 175 billion parameters, GPT-4 more than 1 trillion.
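HumanEval scores like 48.1% and 67% are pass@1 rates: the fraction of the benchmark’s programming problems for which a generated solution passes the unit tests. The paper that introduced HumanEval also defines an unbiased pass@k estimator for the case where n samples are drawn per problem and c of them pass. A minimal Python sketch of that formula follows. The per-problem sample counts in the usage lines are made up for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: 1 - C(n-c, k) / C(n, k).

    n: number of samples generated for the problem
    c: number of those samples that pass the unit tests
    k: number of attempts the metric allows
    """
    if n - c < k:
        # Every size-k subset of the samples contains at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over all problems, given an (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three problems, 10 samples each.
results = [(10, 7), (10, 0), (10, 3)]
print(benchmark_score(results, k=1))
```

For k = 1 the estimator reduces to c / n per problem, so a single-attempt score is simply the average share of passing samples.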

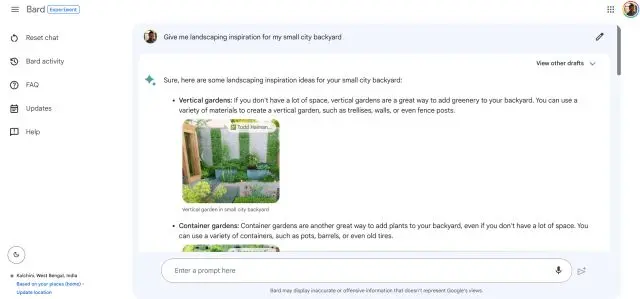

3. PaLM 2 (Bison-001)

Next, we have Google’s PaLM 2, which ranks among the best large language models of 2023. With PaLM 2, Google has focused on commonsense reasoning, formal logic, mathematics, and advanced coding in 20+ languages. The largest PaLM 2 model is said to have 540 billion parameters and a maximum context length of 4,096 tokens.

Google has announced four PaLM 2 models in different sizes: Gecko, Otter, Bison, and Unicorn. Of these, Bison is currently available, and it scored 6.40 in the MT-Bench test, while GPT-4 scored a whopping 8.99.

That said, in reasoning evaluations such as WinoGrande, StrategyQA, and XCOPA, PaLM 2 does a remarkable job and outperforms GPT-4. It’s also a multilingual model that can understand idioms, riddles, and nuanced text in different languages, something other LLMs struggle with.

One more advantage of PaLM 2 is that it’s very quick to respond and offers three responses at once. You can follow our article to test the PaLM 2 (Bison-001) model on Google’s Vertex AI platform. Consumers, meanwhile, can use Google Bard, which runs on PaLM 2.

4. Claude v1

In case you’re unaware, Claude is a powerful LLM developed by Anthropic, a company backed by Google. Anthropic was co-founded by former OpenAI employees, and its approach is to build AI assistants that are helpful, honest, and harmless. In several benchmark tests, Anthropic’s Claude v1 and Claude Instant models have shown great promise. In fact, Claude v1 performs better than PaLM 2 in the MMLU and MT-Bench tests.

It comes close to GPT-4, scoring 7.94 in the MT-Bench test against GPT-4’s 8.99. In the MMLU benchmark, Claude v1 scores 75.6 and GPT-4 scores 86.4. Anthropic also became the first company to offer a 100k-token context window, in its Claude-instant-100k model. That means you can load close to 75,000 words in a single window. That’s absolutely crazy, right? If you’re interested, you can check out our tutorial on how to use Anthropic Claude right now.
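The “100k tokens ≈ 75,000 words” figure comes from a common rule of thumb: one English token is roughly three-quarters of a word. Applying that approximate ratio to the context lengths quoted in this article gives a rough sense of scale. The Python sketch below uses the nominal “8K” and “16K” sizes as written, so exact per-model limits may differ slightly.

```python
# Rule of thumb for English text: ~0.75 words per token (an approximation, not exact).
WORDS_PER_TOKEN = 0.75

# Context lengths (in tokens) as quoted in this article; "8K"/"16K" taken as nominal sizes.
CONTEXT_WINDOWS = {
    "PaLM 2": 4_096,
    "MPT-30B": 8_000,
    "GPT-3.5-turbo (16K)": 16_000,
    "GPT-4 (32K)": 32_768,
    "Claude-instant-100k": 100_000,
}


def approx_words(tokens: int) -> int:
    """Rough number of English words that fit in the given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


for name, tokens in CONTEXT_WINDOWS.items():
    print(f"{name}: {tokens:,} tokens ≈ {approx_words(tokens):,} words")
```

Real counts depend on the tokenizer and the language. Non-English text often needs more tokens per word, so fewer words fit.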

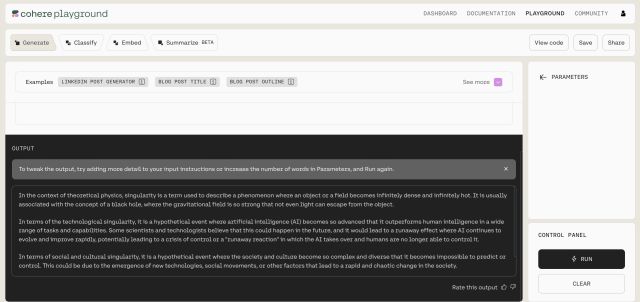

5. Cohere

Cohere is an AI startup founded by former Google employees who worked on the Google Brain team. One of its co-founders, Aidan Gomez, was an author of the “Attention Is All You Need” paper that introduced the Transformer architecture. Unlike other AI companies, Cohere focuses on enterprises, solving generative AI use cases for businesses. Cohere offers a range of models, from small ones with just 6B parameters to large ones with 52B parameters.

The latest Cohere Command model is winning praise for its accuracy and robustness. According to Stanford HELM, Cohere Command has the highest accuracy score among its peers. Companies such as Spotify, Jasper, and HyperWrite also use Cohere’s models to deliver AI experiences.

In terms of pricing, Cohere charges $15 to generate 1 million tokens, while OpenAI’s turbo model charges $4 for the same number of tokens. In terms of accuracy, however, Cohere is ahead of other LLMs. So if you run a business and are looking for the best LLM to incorporate into your product, take a look at Cohere’s models.
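At the per-million-token prices quoted above (the article’s figures, which change often), the cost gap grows linearly with volume. Here is a quick Python sketch for a hypothetical monthly workload:

```python
# USD per 1 million generated tokens, as quoted in this article.
PRICE_PER_MILLION = {"Cohere": 15.0, "OpenAI turbo": 4.0}


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of generating `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million


monthly_tokens = 50_000_000  # hypothetical workload
for provider, price in PRICE_PER_MILLION.items():
    print(f"{provider}: ${cost_usd(monthly_tokens, price):,.2f}")
```

At these prices, Cohere costs 3.75 times as much per token, so the trade-off is paying more for its reported accuracy advantage.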

6. Falcon

Falcon is the first open-source large language model on this list, and it has outranked all the open-source models released so far, including LLaMA, StableLM, MPT, and more. It was developed by the Technology Innovation Institute (TII) in the UAE. The best thing about Falcon is that it has been open-sourced under the Apache 2.0 license, which means you can use the model for commercial purposes. There are no royalties or restrictions either.

So far, TII has released two Falcon models, with 40B and 7B parameters. The developer notes that these are raw models; if you want to use them for chatting, you should go for the Falcon-40B-Instruct model, which is fine-tuned for most use cases.

Falcon was mainly trained on English, German, Spanish, and French, but it can also work in Italian, Portuguese, Polish, Dutch, Romanian, Czech, and Swedish. So if you are interested in open-source AI models, take a look at Falcon first.

7. LLaMA

Ever since the LLaMA models leaked online, Meta has leaned heavily into open source. LLaMA comes in various sizes, from 7 billion to 65 billion parameters. According to Meta, its LLaMA-13B model outperforms OpenAI’s GPT-3, which has 175 billion parameters. Many developers are using LLaMA as a base to fine-tune and create some of the best open-source models out there. That said, keep in mind that LLaMA has been released for research only and can’t be used commercially, unlike TII’s Falcon model.

As for the LLaMA 65B model, it has shown amazing capability in most use cases. It ranks among the top 10 models on Hugging Face’s Open LLM Leaderboard. Meta says it did not use any proprietary data to train the model. Instead, the company used publicly available data from CommonCrawl, C4, GitHub, ArXiv, Wikipedia, StackExchange, and more.

Simply put, after Meta released LLaMA, the open-source community saw rapid innovation and came up with novel techniques for making smaller and more efficient models.

8. Guanaco-65B

Among the many LLaMA-derived models, Guanaco-65B has turned out to be the best open-source LLM, just behind Falcon. In the MMLU test, it scored 52.7, while Falcon scored 54.1. Similarly, in the TruthfulQA evaluation, Guanaco scored 51.3 and Falcon was a notch higher at 52.5. Guanaco comes in four flavors: 7B, 13B, 33B, and 65B. All of them were fine-tuned on the OASST1 dataset by Tim Dettmers and other researchers.

To fine-tune Guanaco, the researchers came up with a new technique called QLoRA, which greatly reduces memory usage while preserving full 16-bit task performance. On the Vicuna benchmark, Guanaco-65B outperforms even ChatGPT (the GPT-3.5 model) despite having far fewer parameters.

The best part is that the 65B model was trained on a single GPU with 48GB of VRAM in just 24 hours. That shows how far open-source models have come in reducing cost while maintaining quality. To sum up, if you want to try an offline, local LLM, you should definitely give the Guanaco models a shot.
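A 65B-parameter model can fit on a 48GB card because QLoRA keeps the frozen base weights in 4-bit precision (its 4-bit NormalFloat format) and trains only small LoRA adapters on top. The Python sketch below does a back-of-the-envelope estimate for the weights alone. It deliberately ignores activations, adapter weights, optimizer state, and quantization constants, all of which add memory on top.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS_65B = 65e9
GPU_VRAM_GB = 48

for label, bits in [("16-bit", 16), ("4-bit (QLoRA base)", 4)]:
    gb = weight_memory_gb(PARAMS_65B, bits)
    fits = "fits" if gb < GPU_VRAM_GB else "does not fit"
    print(f"{label}: {gb:.1f} GB of weights, {fits} in {GPU_VRAM_GB} GB")
```

At 16 bits the weights alone need about 130 GB. At 4 bits they need about 32.5 GB, which leaves headroom on a 48GB GPU for the extra training overhead.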

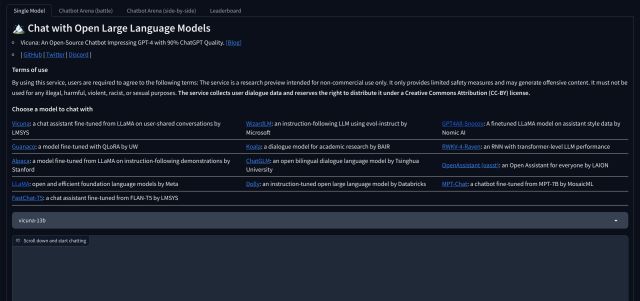

9. Vicuna 33B

Vicuna is another powerful open-source LLM, developed by LMSYS. Like many other open-source models, it is derived from LLaMA. It was fine-tuned with supervised instruction tuning on data collected from sharegpt.com, a portal where users share their ChatGPT conversations. It’s an auto-regressive large language model with 33 billion parameters.
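“Auto-regressive” means the model generates text one token at a time, feeding each predicted token back in as input for the next step. The toy Python sketch below shows that loop with greedy decoding. The hard-coded next-token table is a hypothetical stand-in for the neural network, and unlike a real LLM it looks only at the last token rather than the whole context.

```python
from typing import Callable


def generate(prompt: list[str],
             next_token: Callable[[list[str]], str],
             max_new_tokens: int = 10,
             eos: str = "<eos>") -> list[str]:
    """Greedy autoregressive generation: each new token is predicted from all
    tokens produced so far, then appended to the context for the next step."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(tokens)
        if token == eos:
            break
        tokens.append(token)
    return tokens


# Hypothetical stand-in for a language model: predicts from the last token only.
TOY_TABLE = {"Vicuna": "is", "is": "an", "an": "open-source",
             "open-source": "LLM", "LLM": "<eos>"}


def toy_model(context: list[str]) -> str:
    return TOY_TABLE.get(context[-1], "<eos>")


print(" ".join(generate(["Vicuna"], toy_model)))  # Vicuna is an open-source LLM
```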

In LMSYS’s own MT-Bench test, Vicuna-33B scored 7.12, while the best proprietary model, GPT-4, scored 8.99. In the MMLU test, it achieved 59.2 versus GPT-4’s 86.4. Despite being a much smaller model, Vicuna performs remarkably well. LMSYS also offers an online demo where you can chat with the model.

10. MPT-30B

MPT-30B is another open-source LLM that competes against LLaMA-derived models. It was developed by MosaicML and fine-tuned on a large corpus of data from different sources, including ShareGPT-Vicuna, Camel-AI, GPTeacher, Guanaco, and Baize. The best part about this open-source model is its context length of 8K tokens.

Moreover, it outperforms OpenAI’s GPT-3 and scores 6.39 in LMSYS’s MT-Bench test. If you are looking for a smaller LLM to run locally, MPT-30B is a great choice.

11. 30B-Lazarus

The 30B-Lazarus model was developed by CalderaAI and uses LLaMA as its foundation model. The developer combined LoRA-tuned models and datasets from several sources, including Manticore, SuperCOT-LoRA, SuperHOT, GPT-4 Alpaca-LoRA, and more. As a result, the model performs much better on many LLM benchmarks. It scored 81.7 in HellaSwag and 45.2 in MMLU, just behind Falcon and Guanaco. If your use case is mostly text generation rather than conversational chat, 30B-Lazarus may be a good choice.
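LoRA fine-tuning leaves a weight matrix W frozen and learns a low-rank update B·A. One general way to combine several LoRA-tuned variants is to add their scaled updates into the base weights: W' = W + Σ sᵢ·Bᵢ·Aᵢ. CalderaAI’s exact merging recipe is not described here, so the pure-Python sketch below only illustrates that general idea. The tiny matrices and the scaling factors are all hypothetical.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (m x n) and an (n x p) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def merge_loras(w: Matrix, adapters: list[tuple[Matrix, Matrix, float]]) -> Matrix:
    """Return W + sum_i scale_i * (B_i @ A_i) without modifying W.

    Each adapter is (B, A, scale) with B: d x r and A: r x k, where r is the LoRA rank.
    """
    merged = [row[:] for row in w]
    for b, a, scale in adapters:
        delta = matmul(b, a)
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += scale * value
    return merged


# Tiny 2x2 base weight and two rank-1 adapters (all values hypothetical).
W = [[1.0, 0.0], [0.0, 1.0]]
adapter_1 = ([[1.0], [0.0]], [[0.5, 0.5]], 1.0)  # B: 2x1, A: 1x2, scale 1.0
adapter_2 = ([[0.0], [2.0]], [[1.0, 0.0]], 0.5)  # B: 2x1, A: 1x2, scale 0.5
print(merge_loras(W, [adapter_1, adapter_2]))  # [[1.5, 0.5], [1.0, 1.0]]
```

For a d × k matrix, each rank-r adapter stores only r(d + k) numbers instead of d·k. That is why LoRA adapters are cheap to train and share.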

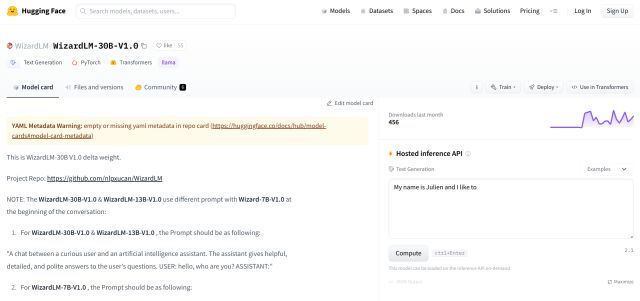

12. WizardLM

WizardLM is our next open-source large language model, built to follow complex instructions. A team of AI researchers came up with an Evol-Instruct approach that rewrites an initial set of instructions into more complex ones. The generated instruction data is then used to fine-tune the LLaMA model.
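The Evol-Instruct idea can be sketched as a loop. Start from a seed instruction, ask an LLM to rewrite it into a harder variant using one of several evolution prompts, and repeat for a few rounds, keeping every version as training data. In the Python sketch below, the evolution prompts are paraphrased examples and `fake_llm` is a hypothetical stand-in for a real LLM call. The WizardLM authors’ actual prompts and filtering steps are more involved.

```python
import random
from typing import Callable, Optional

# Paraphrased "make it harder" operations (illustrative, not the paper's exact prompts).
EVOLUTION_PROMPTS = [
    "Add one more constraint or requirement to this instruction:",
    "Rewrite this instruction so it requires more reasoning steps:",
    "Replace general concepts in this instruction with more specific ones:",
]


def evolve(seed: str, rewrite: Callable[[str], str], rounds: int = 3,
           rng: Optional[random.Random] = None) -> list[str]:
    """Return the seed plus one progressively more complex rewrite per round."""
    rng = rng or random.Random(0)
    history = [seed]
    for _ in range(rounds):
        prompt = f"{rng.choice(EVOLUTION_PROMPTS)}\n{history[-1]}"
        history.append(rewrite(prompt))
    return history


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in: a real pipeline would send `prompt` to an LLM.
    instruction = prompt.split("\n", 1)[1]
    return instruction + " (harder)"


for step in evolve("Write a function that reverses a string.", fake_llm, rounds=2):
    print(step)
```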

Thanks to this approach, WizardLM performs much better on benchmarks, and in the authors’ evaluations, users even preferred WizardLM’s output to ChatGPT’s responses. In the MT-Bench test, WizardLM scored 6.35, and it scored 52.3 in the MMLU test. Overall, with just 13B parameters, WizardLM does a pretty good job and opens the door for smaller models.
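The benchmark scores in this list are spread across several sections, so lining them up can help. The Python snippet below collects the MT-Bench (out of 10) and MMLU figures quoted above and expresses each as a fraction of GPT-4’s score. It only rearranges the article’s numbers and adds no new measurements.

```python
# Scores as quoted in this article.
MT_BENCH = {
    "GPT-4": 8.99, "Claude v1": 7.94, "Vicuna-33B": 7.12,
    "PaLM 2 (Bison)": 6.40, "MPT-30B": 6.39, "WizardLM-13B": 6.35,
}
MMLU = {
    "GPT-4": 86.4, "Claude v1": 75.6, "Vicuna-33B": 59.2, "Falcon": 54.1,
    "Guanaco-65B": 52.7, "WizardLM-13B": 52.3, "30B-Lazarus": 45.2,
}


def relative_to(scores: dict[str, float], reference: str = "GPT-4") -> list[tuple[str, float]]:
    """Each model's score as a fraction of the reference model's score, best first."""
    ref = scores[reference]
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(name, score / ref) for name, score in ranked]


for title, scores in [("MT-Bench", MT_BENCH), ("MMLU", MMLU)]:
    print(title)
    for name, ratio in relative_to(scores):
        print(f"  {name:<15} {ratio:6.1%} of GPT-4")
```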

Bonus: GPT4All

GPT4All is a project run by Nomic AI. I recommend it not just for its in-house model but as a way to run local LLMs on your computer without a dedicated GPU or an internet connection. Nomic has developed a 13B Snoozy model that works quite well. I have tested it on my computer several times, and it generates responses fairly quickly, considering that I have an entry-level PC. I have also used PrivateGPT with GPT4All, and it did answer from my custom dataset.

Apart from that, it hosts 12 open-source models from different organizations. Most of them have 7B or 13B parameters and weigh around 3 GB to 8 GB. Best of all, you get a GUI installer where you can select a model and start using it right away, with no fiddling with the Terminal. Simply put, if you want to run a local LLM on your computer in a user-friendly way, GPT4All is the best way to do it.