AnythingLLM Lets You Chat With Documents Locally; Here’s How to Use It

To run a local LLM, you have LM Studio, but it doesn’t support ingesting local documents. There is GPT4ALL, but I find it much heavier to use, and PrivateGPT has a command-line interface, which isn’t suitable for average users. Enter AnythingLLM, which comes with a slick graphical user interface that lets you feed in documents locally and chat with your files, even on consumer-grade computers. I used it extensively and found AnythingLLM much better than the other solutions. Here is how you can use it.

Note:

AnythingLLM runs on budget computers as well, leveraging both CPU and GPU. I tested it on an Intel i3 10th-gen processor with a low-end Nvidia GT 730 GPU. That said, token generation will be slow. If you have a powerful computer, it will be much faster at generating output.

Download and Set Up AnythingLLM

- Go ahead and download AnythingLLM from here. It’s freely available for Windows, macOS, and Linux.

- Next, run the setup file and it will install AnythingLLM. This process may take some time.

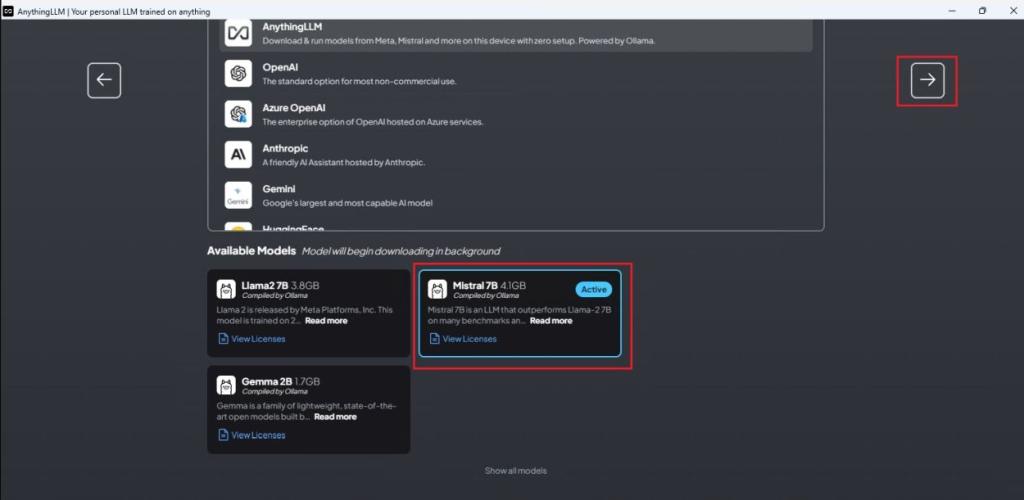

- After that, click on “Get started” and scroll down to choose an LLM. I have selected “Mistral 7B”. You can also download even smaller models from the list below.

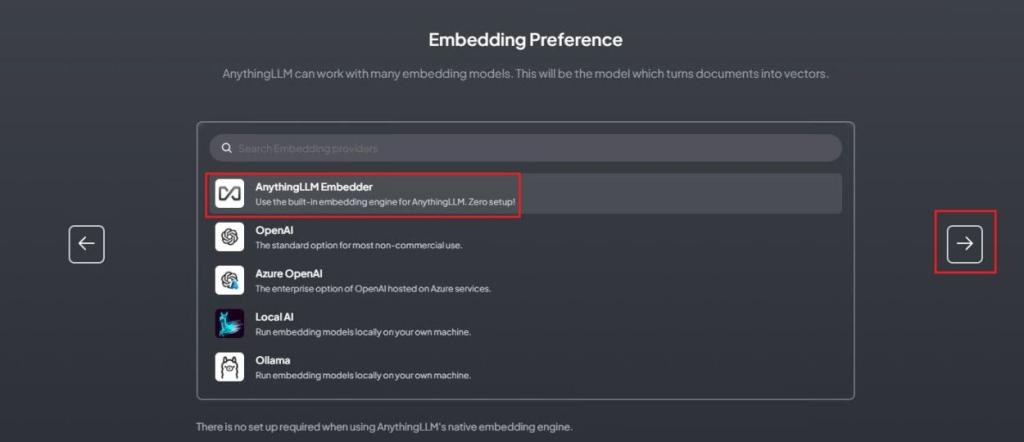

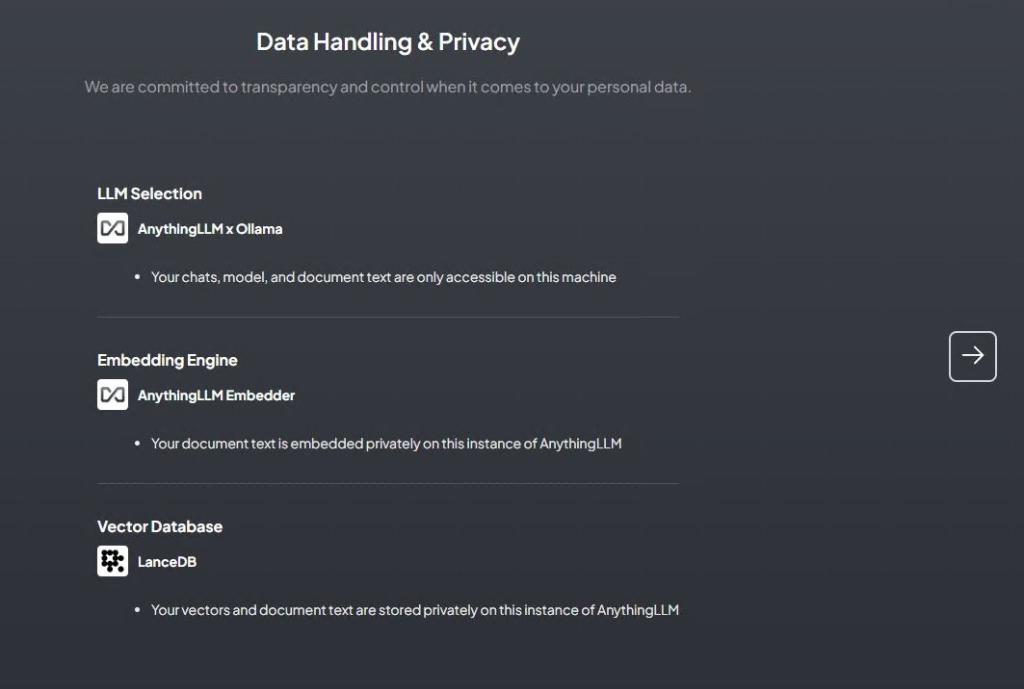

- Next, choose “AnythingLLM Embedder” as it requires no manual setup.

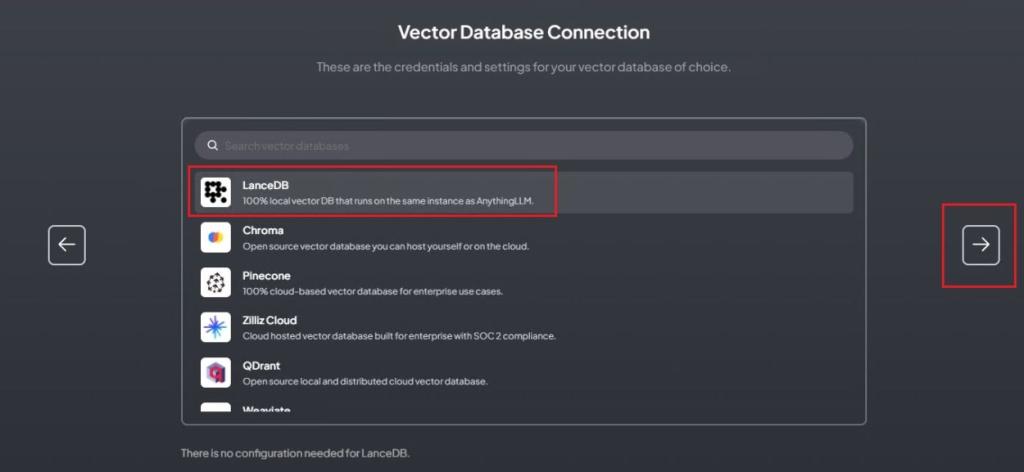

- After that, select “LanceDB”, which is a local vector database.

- Finally, review your selections and fill out the information on the next page. You can also skip the survey.

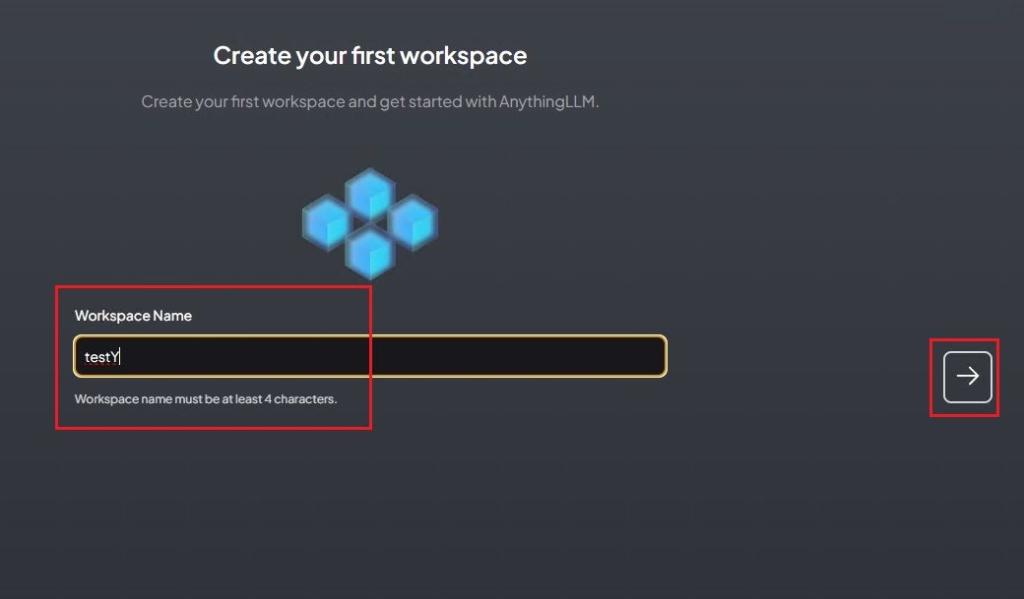

- Now, set a name for your workspace.

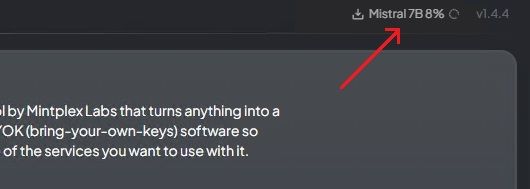

- You’re almost done. You can see that Mistral 7B is being downloaded in the background. Once the LLM is downloaded, move to the next step.

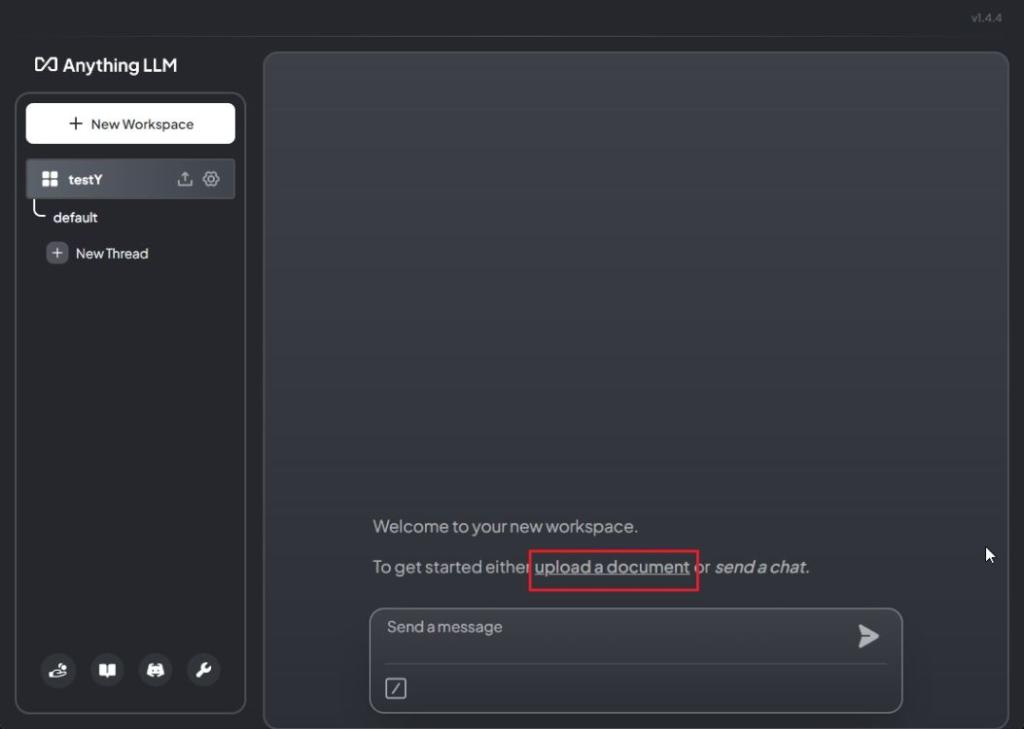

Upload Your Documents and Chat Locally

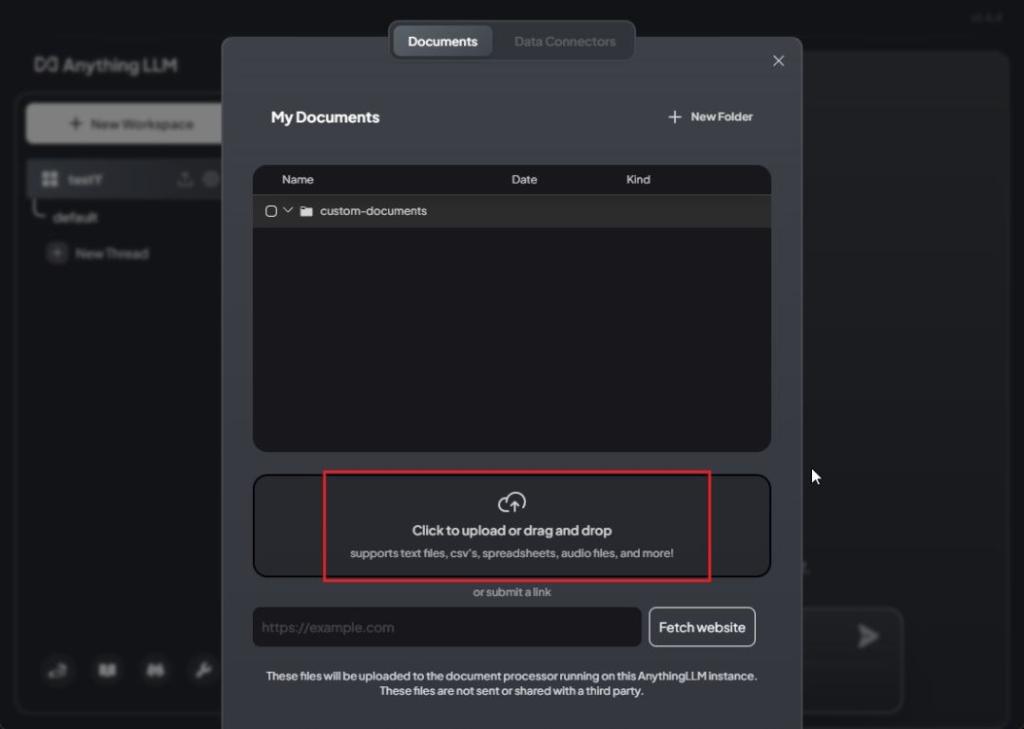

- First of all, click on “Upload a document”.

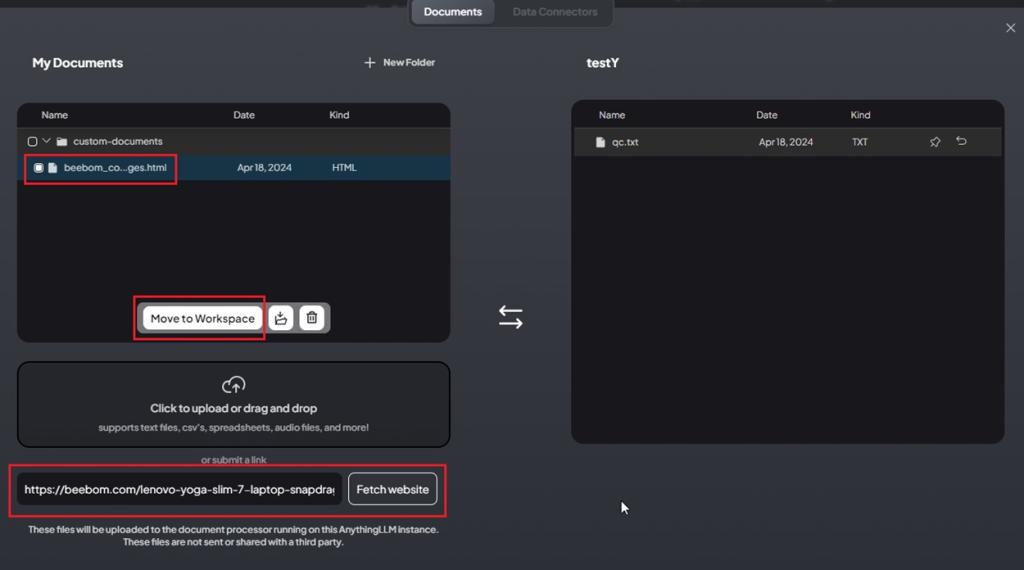

- Now, click to upload your files or drag and drop them. You can upload a wide range of file formats, including PDF, TXT, CSV, audio files, etc.

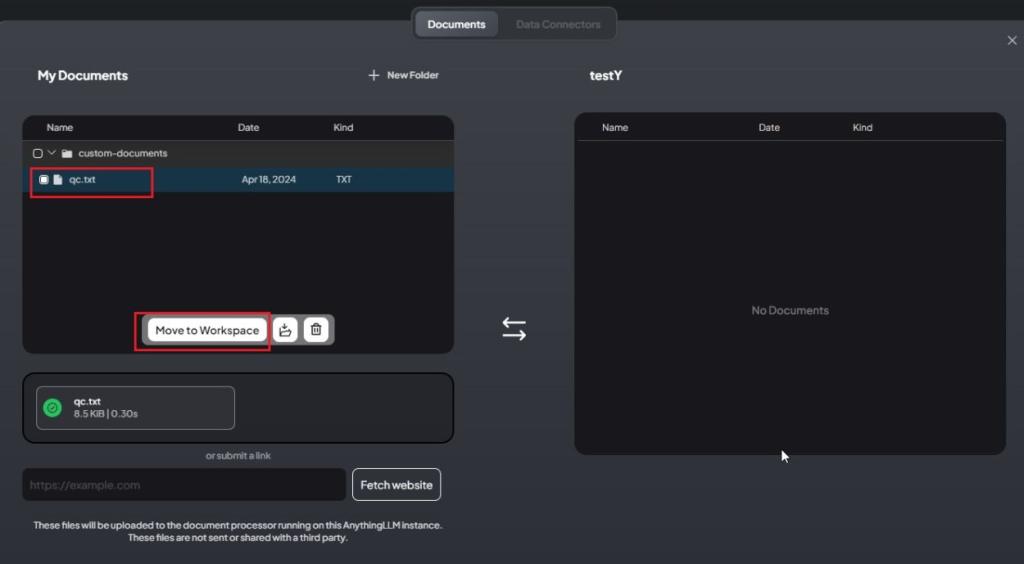

- I have uploaded a TXT file. Now, select the uploaded file and click on “Move to Workspace”.

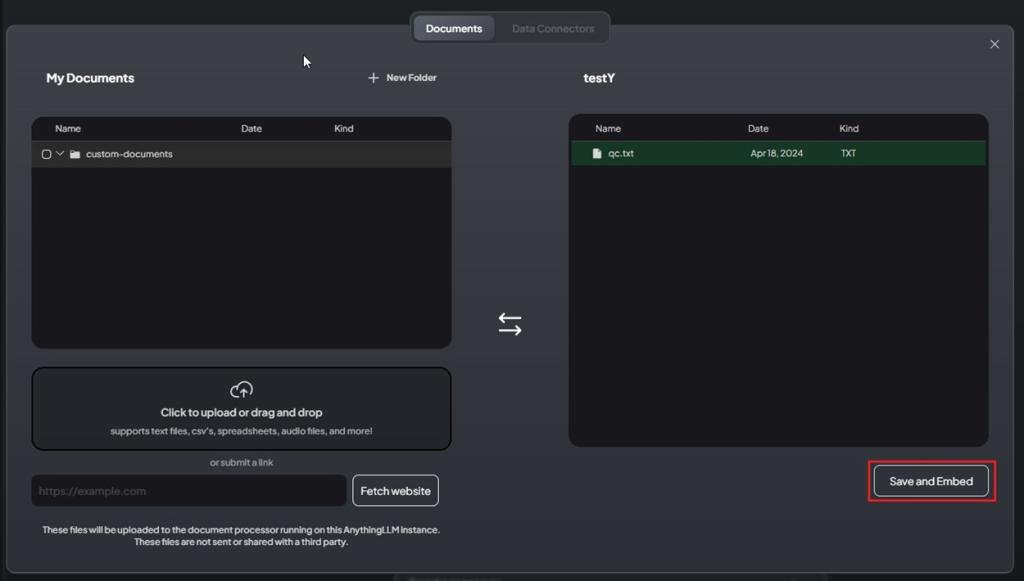

- Next, click on “Save and Embed”. After that, close the window.

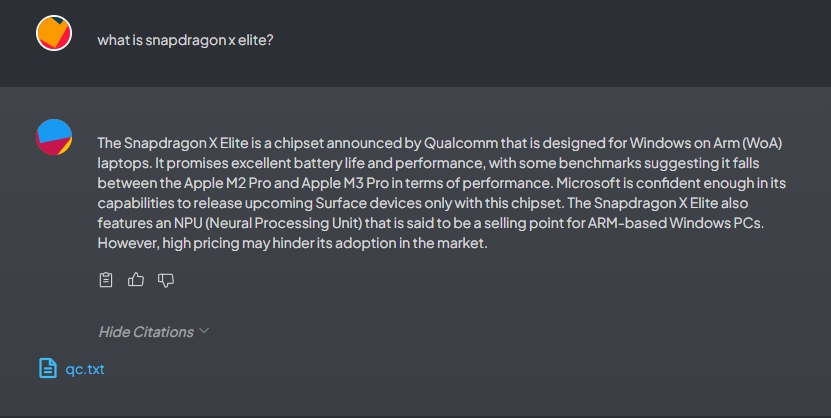

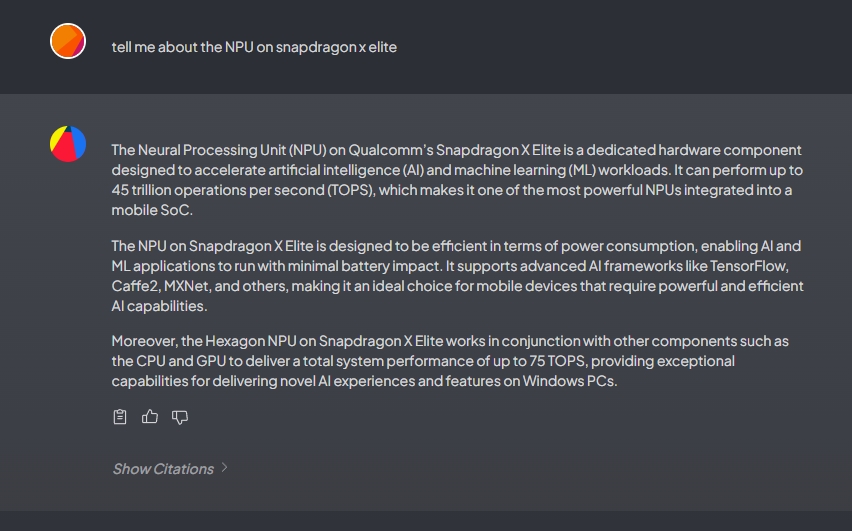

- Now you can start chatting with your documents locally. As you can see, I asked a question based on the TXT file, and it gave a correct answer, citing the text file.

- I asked a few more questions, and it responded accurately.

- The best part is that you can also add a website URL and it will fetch the content from the website. You can then start chatting with the LLM about it.
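
If you are curious what “Save and Embed” and a local vector database do conceptually, here is a minimal Python sketch. It is not AnythingLLM’s actual code and does not use LanceDB. The toy word-count “embedding” stands in for a real embedding model, and every function name here (`chunk_text`, `embed`, `cosine`, `build_index`, `retrieve`) is hypothetical, made up for illustration. The idea it shows is general: split documents into chunks, turn each chunk into a vector, and at question time pick the chunk whose vector is closest to the question’s vector so it can be handed to the LLM as context.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: a bag-of-words count vector.

    Assumption: a real setup would call an embedding model here instead.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(chunks):
    """Store each chunk next to its vector (the job a vector database does)."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, question, top_k=1):
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Made-up sample notes standing in for an uploaded TXT file.
notes = [
    "The warranty on the laptop lasts two years from the purchase date.",
    "Office hours are nine to five on weekdays.",
    "Backups run every night at midnight.",
]
index = build_index(notes)
best = retrieve(index, "How long is the laptop warranty?")
print(best[0])
```

In a real pipeline, the retrieved chunk would be added to the prompt sent to the local LLM, which is how the answer can cite your file.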

So this is how you can ingest your documents and files locally and chat with the LLM securely. There is no need to upload your private documents to cloud servers that have sketchy privacy policies. Nvidia has launched a similar program called Chat with RTX, but it only works with high-end Nvidia GPUs. AnythingLLM brings local inferencing even to consumer-grade computers, taking advantage of both CPU and GPU on any silicon.