I Got Access to Gemini 1.5 Pro, and It’s Better Than GPT-4 and Gemini 1.0 Ultra

Google announced the next generation of the Gemini model, Gemini 1.5 Pro, two weeks ago, and we finally got access to a 1 million token context window on the highly anticipated model this morning. So, I dropped all my work for the day, texted my editor that I was testing the new Gemini model, and got to work.

Before I show my comparison results for Gemini 1.5 Pro vs GPT-4 and Gemini 1.0 Ultra, let’s go over the basics of the new Gemini 1.5 Pro model.

What Is the Gemini 1.5 Pro AI Model?

The Gemini 1.5 Pro model appears to be a remarkable multimodal LLM from Google’s stable after months of waiting. Unlike the traditional dense model upon which the Gemini 1.0 family models were built, the Gemini 1.5 Pro model uses a Mixture-of-Experts (MoE) architecture.

Interestingly, the MoE architecture is also reportedly employed by OpenAI on the reigning king, the GPT-4 model.

But that’s not all. Gemini 1.5 Pro can handle a massive context length of 1 million tokens, far more than GPT-4 Turbo’s 128K and Claude 2.1’s 200K token context length. Google has also tested the model internally with up to 10 million tokens, and the Gemini 1.5 Pro model has been able to ingest massive amounts of data, showcasing great retrieval capability.

Google also says that despite Gemini 1.5 Pro being smaller than the largest Gemini 1.0 Ultra model (available via Gemini Advanced), it performs broadly at the same level. So, to evaluate all the tall claims, shall we?

Gemini 1.5 Pro vs Gemini 1.0 Ultra vs GPT-4 Comparison

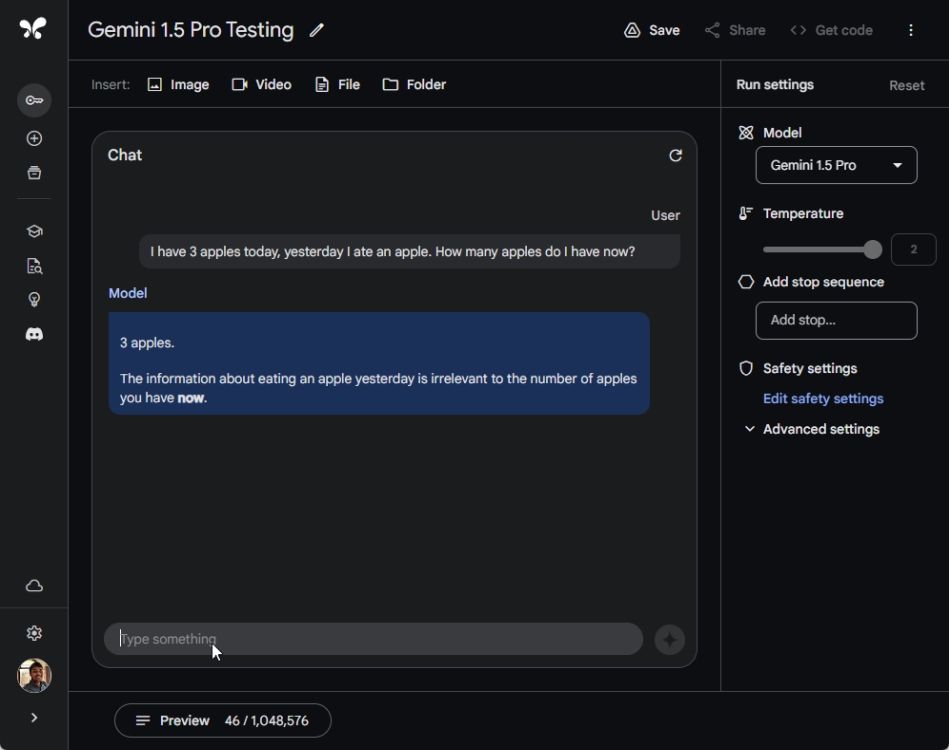

1. The Apple Test

In my earlier Gemini 1.0 Ultra and GPT-4 comparison, Google lost to OpenAI in the standard Apple test, which tests the logical reasoning of LLMs. However, the newly released Gemini 1.5 Pro model correctly answers the question, meaning Google has indeed improved advanced reasoning on the Gemini 1.5 Pro model.

Google is back in the game! And like earlier, GPT-4 responded with a correct answer, while Gemini 1.0 Ultra still gave an incorrect response, saying you have 2 apples left.

I have 3 apples today, yesterday I ate an apple. How many apples do I have now?

Winner: Gemini 1.5 Pro and GPT-4

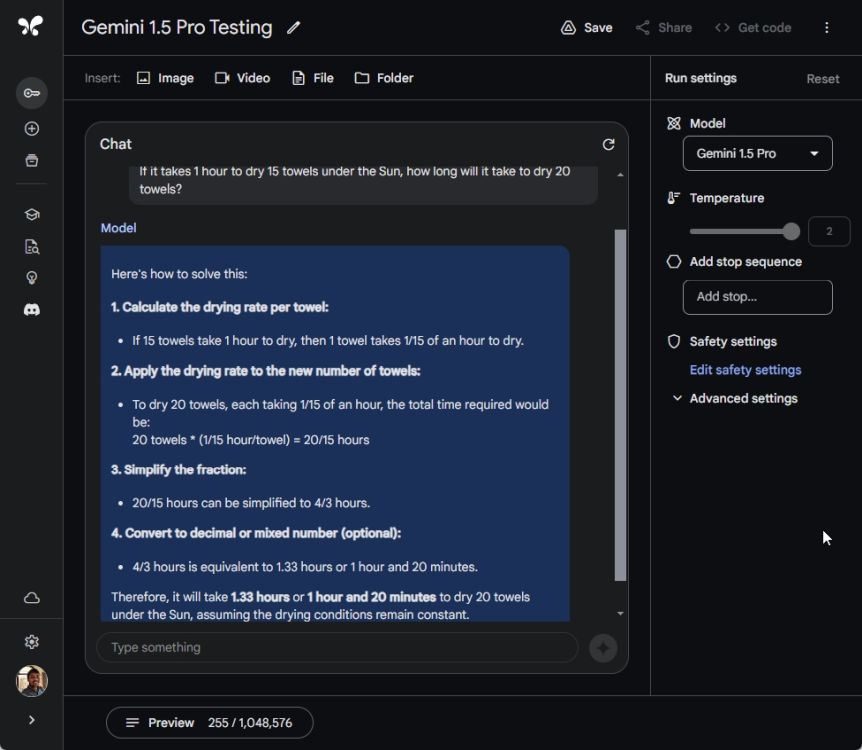

2. The Towel Question

In another test to evaluate the advanced reasoning capability of Gemini 1.5 Pro, I asked the popular towel question. Sadly, all three models got it wrong, including Gemini 1.5 Pro, Gemini 1.0 Ultra, and GPT-4.

None of these AI models understood the basic premise of the question: towels laid out under the Sun dry in parallel, so 20 towels should still take about an hour. Instead, they computed answers using maths and came to an incorrect conclusion. It’s still a long way before AI models can reason the same as humans.

If it takes 1 hour to dry 15 towels under the Sun, how long will it take to dry 20 towels?

Winner: None

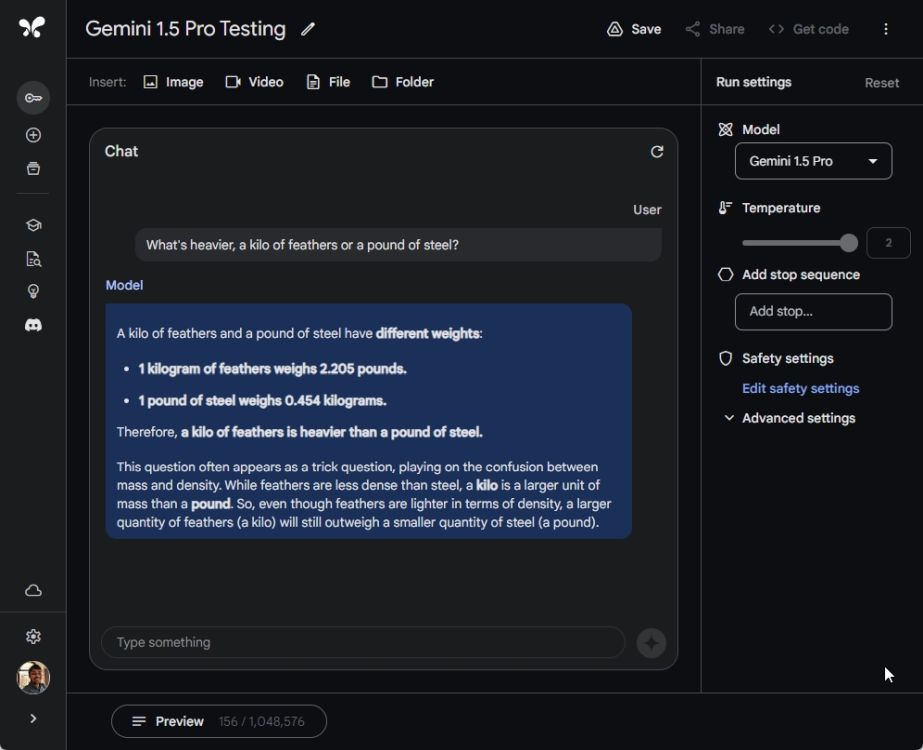

3. Which is Heavier

I then ran a modified version of the weight evaluation test to check the complex reasoning capability of Gemini 1.5 Pro, and it passed successfully along with GPT-4. However, Gemini 1.0 Ultra failed the test again.

Both Gemini 1.5 Pro and GPT-4 correctly identified the units, without delving into density, and said a kilo of any material, including feathers, will always weigh more than a pound of steel or anything else. Great job, Google!

What’s heavier, a kilo of feathers or a pound of steel?

Winner: Gemini 1.5 Pro and GPT-4
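For anyone who wants to check the units themselves, here is a minimal Python sketch of the comparison. The helper name `to_kilograms` is hypothetical and only exists for this illustration; the conversion factor is the standard definition of the avoirdupois pound.

```python
# One avoirdupois pound is defined as exactly 0.45359237 kg.
KG_PER_POUND = 0.45359237


def to_kilograms(value, unit):
    """Convert a mass in 'kg' or 'lb' to kilograms (hypothetical helper)."""
    if unit == "kg":
        return value
    if unit == "lb":
        return value * KG_PER_POUND
    raise ValueError(f"unsupported unit: {unit}")


feathers_kg = to_kilograms(1, "kg")  # a kilo of feathers
steel_kg = to_kilograms(1, "lb")     # a pound of steel

# The material never enters the comparison, only the units do.
heavier = "feathers" if feathers_kg > steel_kg else "steel"
print(heavier)
```

The point of the test is that the answer depends only on the units, not on the material.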

4. Solve a Maths Problem

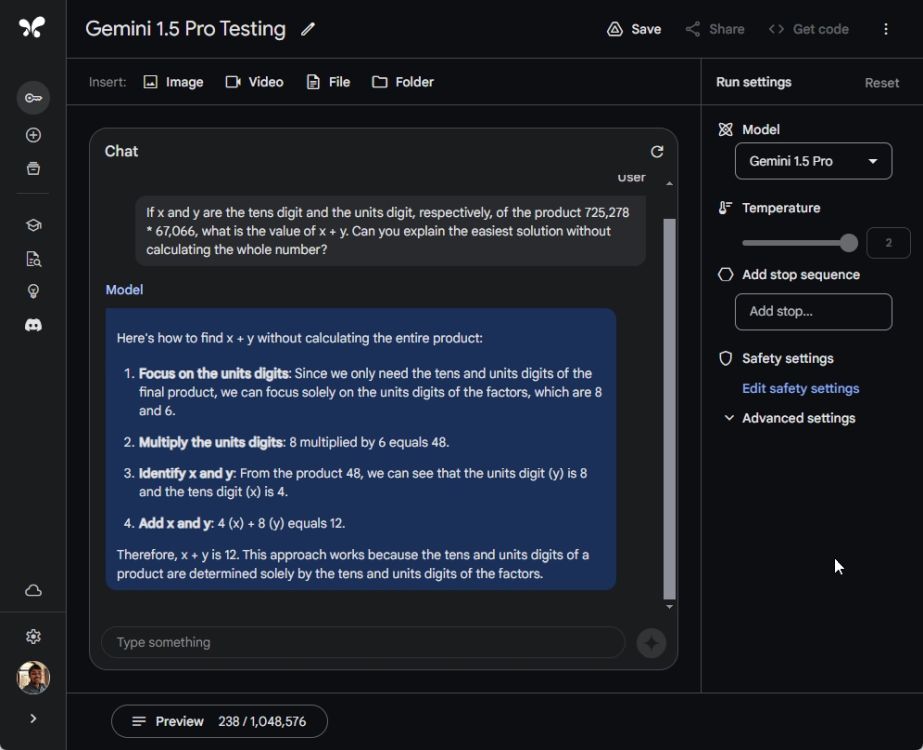

Courtesy of Maxime Labonne, I borrowed and ran one of his math prompts to evaluate Gemini 1.5 Pro’s mathematical prowess. And well, Gemini 1.5 Pro passed the test with flying colors.

I ran the same test on GPT-4 as well, and it also came up with the right answer. But we already knew GPT is quite capable. By the way, I explicitly asked GPT-4 to avoid using the Code Interpreter plugin for mathematical calculations. And unsurprisingly, Gemini 1.0 Ultra failed the test and gave a wrong output. I mean, why am I even including Ultra in this test? (sighs and moves to the next prompt)

If x and y are the tens digit and the units digit, respectively, of the product 725,278 * 67,066, what is the value of x + y. Can you explain the easiest solution without calculating the whole number?

Winner: Gemini 1.5 Pro and GPT-4
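For reference, the shortcut the prompt is fishing for is modular arithmetic: the last two digits of a product depend only on the last two digits of each factor. Below is a minimal Python sketch of that idea; the function name is my own and is not taken from the original prompt.

```python
def last_two_digits_of_product(a, b):
    """Return the last two digits of a * b without forming the full product."""
    # Only the last two digits of each factor affect the last two of the product.
    return ((a % 100) * (b % 100)) % 100


# 725,278 ends in 78 and 67,066 ends in 66; 78 * 66 = 5148, which ends in 48.
last_two = last_two_digits_of_product(725_278, 67_066)

# Split into tens digit (x) and units digit (y).
x, y = divmod(last_two, 10)
digit_sum = x + y

print(x, y, digit_sum)  # 4 8 12
```

So the tens digit is 4, the units digit is 8, and x + y = 12.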

5. Follow User Instructions

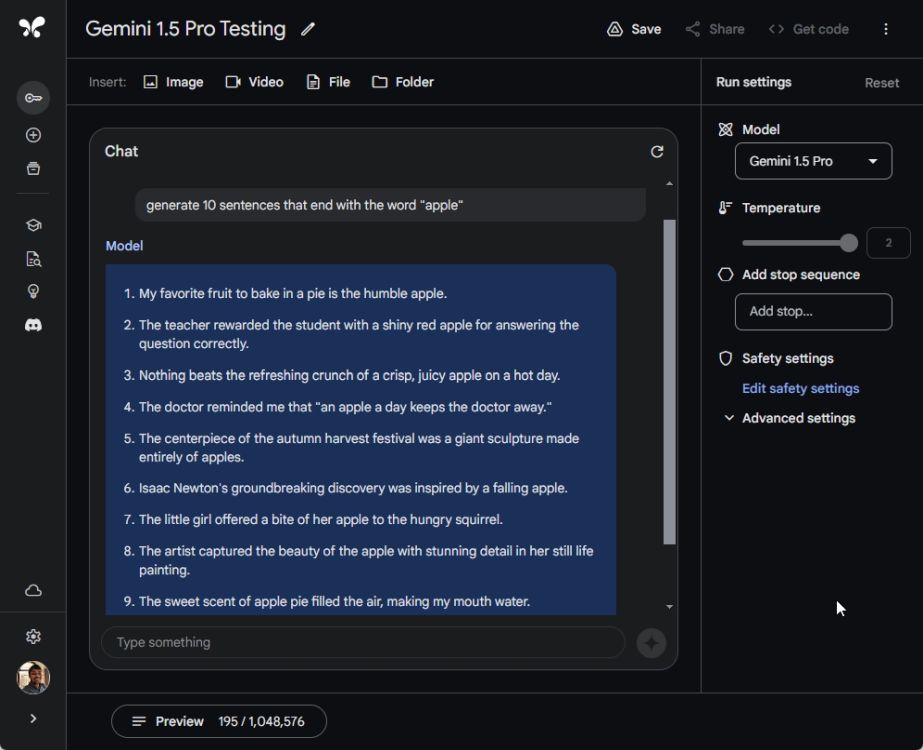

Next, we moved to another test where we evaluated whether Gemini 1.5 Pro could properly follow user instructions. We asked it to generate 10 sentences that end with the word “apple”.

Gemini 1.5 Pro failed this test miserably, only generating three such sentences, while GPT-4 produced nine such sentences. Gemini 1.0 Ultra could only generate two sentences ending with the word “apple.”

generate 10 sentences that end with the word "apple"

Winner: GPT-4
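Scoring this test by hand is easy to get wrong, so here is a rough Python sketch of how the count could be automated. The sample sentences are made up for illustration and are not the models’ actual outputs, and the helper names are hypothetical.

```python
import re


def ends_with_word(sentence, word):
    """True if the final word of the sentence equals `word`, ignoring case
    and any trailing punctuation or quotation marks."""
    words = re.findall(r"[A-Za-z']+", sentence)
    return bool(words) and words[-1].lower() == word.lower()


def count_compliant(sentences, word="apple"):
    """Count how many sentences end with the target word."""
    return sum(ends_with_word(s, word) for s in sentences)


# Hypothetical sample output, not taken from any model in this comparison.
sample = [
    "She packed a shiny red apple.",
    "Apple pie is my favorite dessert.",
    "Nothing beats a crisp apple!",
    "He bought two apples.",
]

compliant = count_compliant(sample)
print(f"{compliant} of {len(sample)} sentences end with 'apple'")
```

Note that the check is strict: a sentence ending in “apples” does not count.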

6. Needle in a Haystack (NIAH) Test

The headline feature of Gemini 1.5 Pro is that it can handle a huge context length of 1 million tokens. Google has already done extensive tests on NIAH and it got 99% retrieval with incredible accuracy. So naturally, I also did a similar test.

I took one of the longest Wikipedia articles (Spanish Conquest of Petén), which has nearly 100,000 characters and consumes around 24,000 tokens. I inserted a needle (a random statement) in the middle of the text to make it harder for AI models to retrieve the statement.

Researchers have shown that AI models perform worse in a long context window if the needle is inserted in the middle.
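If you want to reproduce a similar setup, the needle insertion can be sketched roughly as follows. This is a minimal illustration, not the exact procedure I used: the filler text, the needle sentence, and the 4-characters-per-token rule of thumb are all assumptions (the article above worked out to roughly 4.2 characters per token).

```python
def insert_needle(haystack, needle, depth=0.5):
    """Insert `needle` near the given fractional depth of `haystack`,
    snapping forward to the next sentence boundary."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    target = int(len(haystack) * depth)
    # Look for the next ". " so the needle does not split a sentence.
    boundary = haystack.find(". ", target)
    position = len(haystack) if boundary == -1 else boundary + 2
    return haystack[:position] + needle + " " + haystack[position:]


def estimate_tokens(text, chars_per_token=4.0):
    """Very rough token estimate; real tokenizers will differ."""
    return round(len(text) / chars_per_token)


# Hypothetical haystack and needle for illustration only.
haystack = "Filler sentence about history. " * 1000
needle = "The secret passphrase is blue-giraffe-42."

prompt_text = insert_needle(haystack, needle, depth=0.5)
print(estimate_tokens(prompt_text))
```

Varying `depth` between 0 and 1 lets you test whether retrieval degrades when the needle sits in the middle rather than near the start or end.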

Gemini 1.5 Pro flexed its muscles and correctly answered the question with great accuracy and context. However, GPT-4 couldn’t find the needle from the large text window. And well, Gemini 1.0 Ultra, which is available via Gemini Advanced, currently supports a context window of around 8K tokens, much less than the advertised claim of 32K-context length. Nevertheless, we ran the test with 8K tokens, and yet Gemini 1.0 Ultra failed to find the text statement.

So yeah, for long context retrieval, the Gemini 1.5 Pro model is the reigning king, and Google has surpassed all the AI models out there.

Winner: Gemini 1.5 Professional

7. Multimodal Video Test

While GPT-4 is a multimodal model, it can’t process videos yet. Gemini 1.0 Ultra is a multimodal model as well, but Google has not unlocked the feature for the model yet. So, you can’t upload a video on Gemini Advanced.

That said, Gemini 1.5 Pro, which I’m accessing via Google AI Studio, lets you upload videos as well as various files, images, and even folders consisting of different file types. So I uploaded a 5-minute Beebom video (1080p, 65MB) of the OnePlus Watch 2 review, which is definitely not part of the training data.

The model took a minute to process the video and consumed around 75,000 tokens out of 1,048,576 tokens (less than 10%).
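To put that number in perspective, here is a quick back-of-the-envelope calculation in Python. It only uses the two figures reported above; the function name is my own.

```python
# The context window shown in AI Studio is 2**20 tokens.
CONTEXT_WINDOW = 2 ** 20  # 1,048,576


def context_usage(tokens_used, window=CONTEXT_WINDOW):
    """Fraction of the context window consumed."""
    return tokens_used / window


video_tokens = 75_000  # approximate cost of the 5-minute video
usage = context_usage(video_tokens)
remaining = CONTEXT_WINDOW - video_tokens

print(f"{usage:.1%} used, {remaining:,} tokens remaining")
```

So the 5-minute video uses roughly 7% of the window, leaving well over 900,000 tokens for follow-up questions.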

Now, I threw questions at Gemini 1.5 Pro, starting with what the video is about. I also asked it to show all the key features of the watch. It took close to 20 seconds to answer each question. And the answers were spot on without any sign of hallucination. Next, I asked where the reviewer is sitting, and it gave a detailed answer. After that, I asked what the color of the watch band is, and it said: “green”. Well done!

Finally, I asked Gemini 1.5 Pro to generate a transcript of the video, and the model accurately generated the transcript within a minute. I’m blown away by Gemini 1.5 Pro’s multimodal capability. It was able to successfully analyze every frame of the video and infer meaning intelligently.

This makes Gemini 1.5 Pro a powerful multimodal model, surpassing everything we’ve seen so far. As Simon Willison puts it in his blog, video is the killer app of Gemini 1.5 Pro.

Winner: Gemini 1.5 Pro

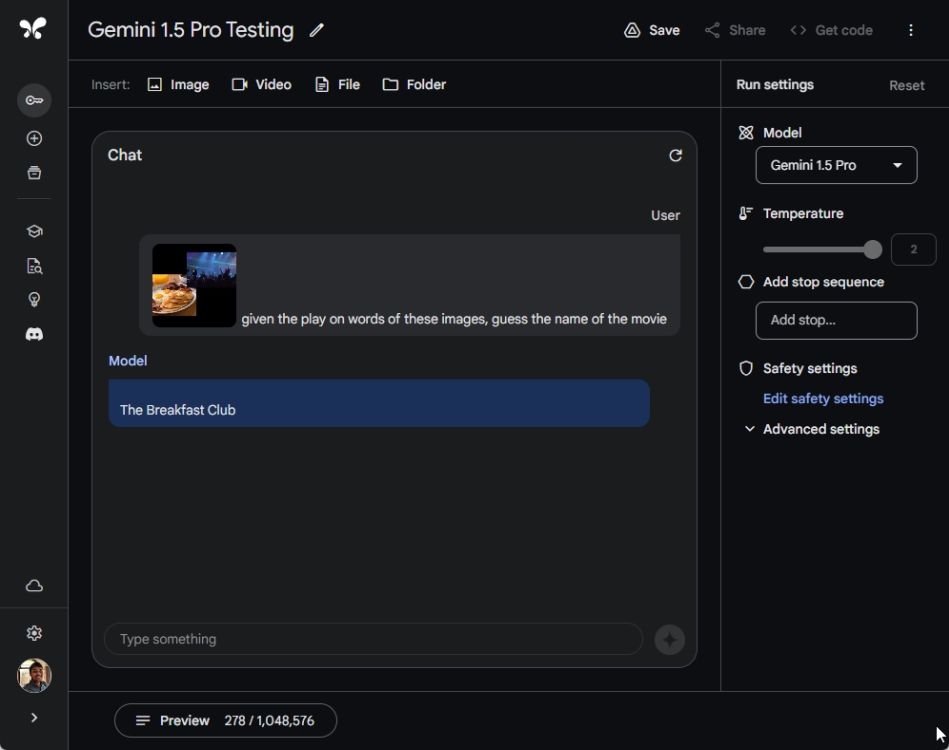

8. Multimodal Image Test

In my final test, I tested the vision capability of the Gemini 1.5 Pro model. I uploaded a still from Google’s demo video, which was presented during the Gemini 1.0 launch. In my earlier test, Gemini 1.0 Ultra failed the image analysis test because Google has yet to enable the multimodal feature for the Ultra model on Gemini Advanced.

Nevertheless, the Gemini 1.5 Pro model quickly generated a response and correctly answered the movie name, “The Breakfast Club”. GPT-4 also gave a correct response. And Gemini 1.0 Ultra couldn’t process the image at all, citing that the image has faces of people, which surprisingly wasn’t the case.

Winner: Gemini 1.5 Pro and GPT-4

Expert Opinion: Google Finally Delivers with Gemini 1.5 Pro

After playing with Gemini 1.5 Pro all day, I can say that Google has finally delivered. The search giant has developed an immensely powerful multimodal model on the MoE architecture which is on par with OpenAI’s GPT-4 model.

It excels in commonsense reasoning and is even better than GPT-4 in several cases, including long-context retrieval, multimodal capability, video processing, and support for various file formats. Don’t forget that we’re talking about a mid-size Gemini 1.5 Pro model. When the Gemini 1.5 Ultra model drops in the future, it will be even more impressive.

Of course, Gemini 1.5 Pro is still in preview and currently available to developers and researchers only to test and evaluate the model. Before a wider public rollout via Gemini Advanced, Google may add additional guardrails which may nerf the model’s performance, but I hope this won’t be the case this time.

Also, remember, when the 1.5 Pro model goes public, users won’t get a massive context window of 1 million tokens. Google has said the model comes with a standard 128,000 token context length, which is still huge. Developers can, of course, leverage the 1 million context window to create unique products for end-users.

Following the Gemini announcement, Google has also released a family of lightweight Gemma models under an open-source license. More recently, the company was embroiled in a controversy surrounding Gemini’s AI image generation fiasco, so do give it a read as well.

Now, what do you think of Gemini 1.5 Pro’s performance? Are you excited that Google is finally back in the AI race and poised to challenge OpenAI, which recently announced Sora, its AI text-to-video generation model? Let us know your opinion in the comment section below.