ChatGPT 4o vs Gemini 1.5 Pro: It’s Not Even Close

OpenAI launched its flagship GPT-4o model at the Spring Update event and made it free for everyone. Just a day later, at the Google I/O 2024 event, Google debuted the Gemini 1.5 Pro model for consumers via Gemini Advanced. Now that two flagship models are available to consumers, let’s compare ChatGPT 4o and Gemini 1.5 Pro and see which one does a better job. On that note, let’s begin.

Note:

To ensure consistency, we have performed all our tests on Google AI Studio and Gemini Advanced. Both host the latest Gemini 1.5 Pro model.

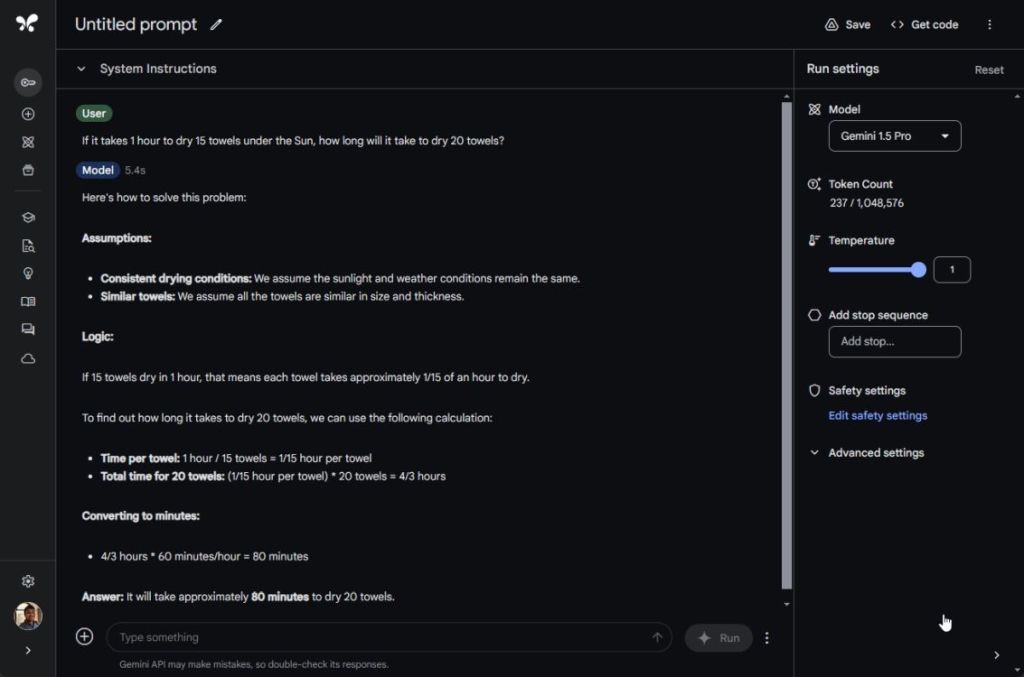

1. Calculate Drying Time

We ran the classic reasoning test on ChatGPT 4o and Gemini 1.5 Pro to test their intelligence. OpenAI’s ChatGPT 4o aced it while the improved Gemini 1.5 Pro model struggled to understand the trick question. It dabbled in mathematical calculations and came to a wrong conclusion.

If it takes 1 hour to dry 15 towels under the Sun, how long will it take to dry 20 towels?

Winner: ChatGPT 4o

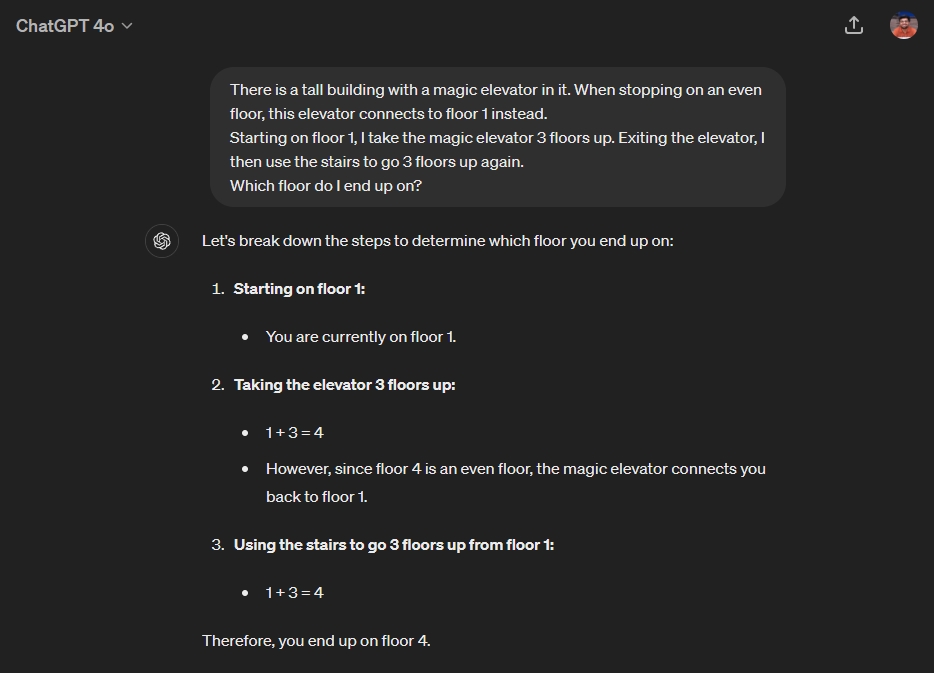

2. Magic Elevator Test

In the magic elevator test, the earlier ChatGPT 4 model had failed to correctly guess the answer. However, this time, the ChatGPT 4o model responded with the right answer. Gemini 1.5 Pro also generated the right answer.

There is a tall building with a magic elevator in it. When stopping on an even floor, this elevator connects to floor 1 instead.

Starting on floor 1, I take the magic elevator 3 floors up. Exiting the elevator, I then use the stairs to go 3 floors up again.

Which floor do I end up on?

Winner: ChatGPT 4o and Gemini 1.5 Professional
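The riddle can be traced step by step. Here is a minimal Python sketch, assuming the "even floor connects to floor 1" rule applies only to the elevator and never to the stairs. The function names are hypothetical and exist only for this illustration.

```python
def ride_magic_elevator(start: int, floors_up: int) -> int:
    """Return the floor you exit on after riding the magic elevator."""
    target = start + floors_up
    # The elevator connects to floor 1 whenever it would stop on an even floor.
    return 1 if target % 2 == 0 else target


def take_stairs(start: int, floors_up: int) -> int:
    """Stairs are ordinary: no magic rule applies (an assumption of this sketch)."""
    return start + floors_up


after_elevator = ride_magic_elevator(1, 3)    # 1 + 3 = 4 is even, so you exit on floor 1
final_floor = take_stairs(after_elevator, 3)  # 1 + 3 = 4
print(final_floor)  # 4
```

Under these assumptions the elevator ride lands you back on floor 1, and the stairs then take you to floor 4.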

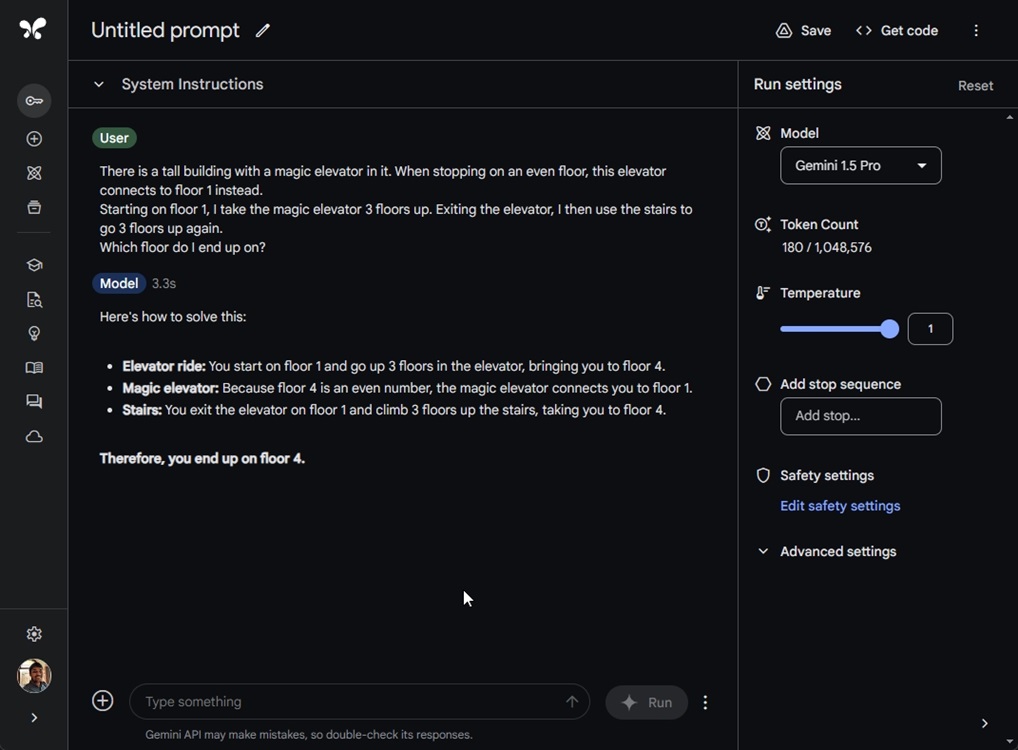

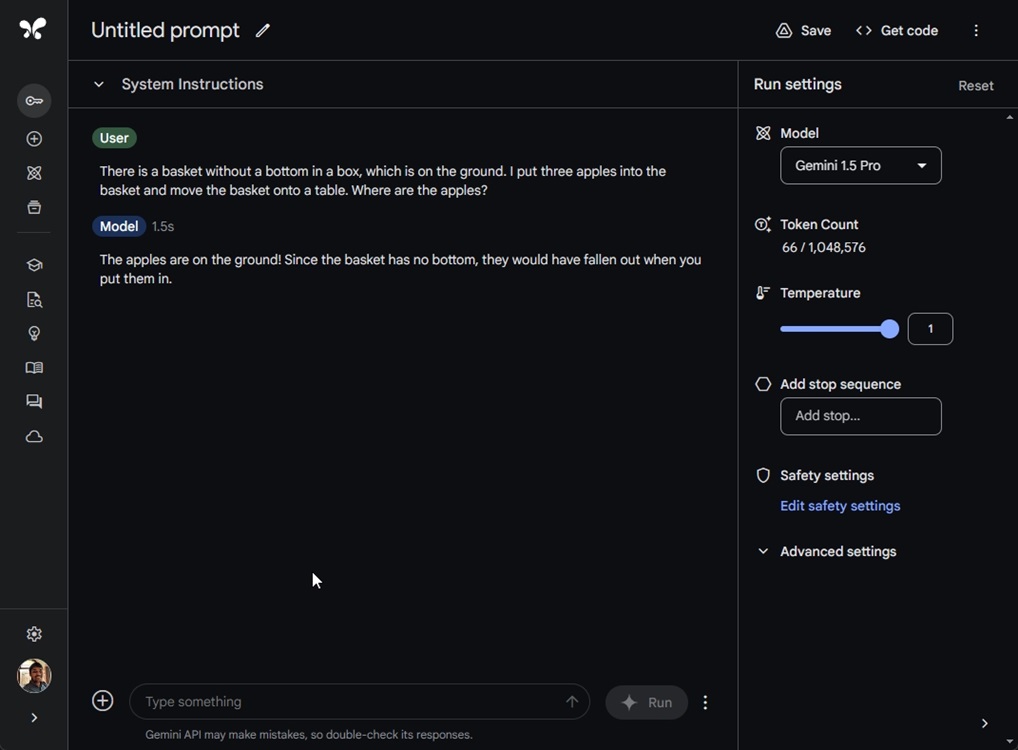

3. Find the Apple

In this test, Gemini 1.5 Pro outright failed to understand the nuances of the question. It seems the Gemini model is not attentive and overlooks many key aspects of the question. On the other hand, ChatGPT 4o correctly says that the apples are in the box on the ground. Kudos OpenAI!

There is a basket without a bottom in a box, which is on the ground. I put three apples into the basket and move the basket onto a table. Where are the apples?

Winner: ChatGPT 4o

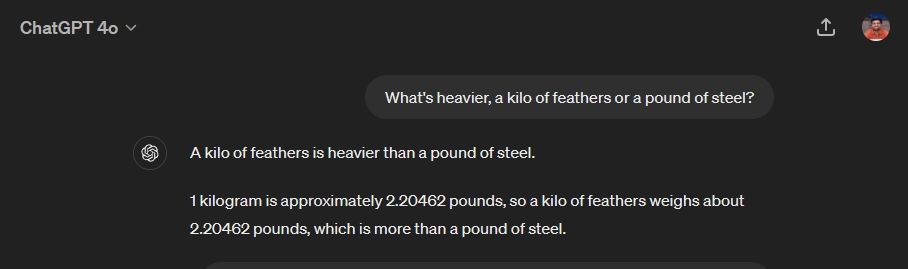

4. Which is Heavier?

In this commonsense reasoning test, Gemini 1.5 Pro gets the answer wrong and says both weigh the same. But ChatGPT 4o rightly points out that the units are different, hence a kilogram of any material will weigh more than a pound. It looks like the improved Gemini 1.5 Pro model has gotten dumber over time.

What’s heavier, a kilo of feathers or a pound of steel?

Winner: ChatGPT 4o
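The unit mismatch is easy to verify with a quick conversion. This is a small Python sketch, assuming "pound" means the international avoirdupois pound, which is defined as exactly 0.45359237 kg. The variable names are made up for illustration.

```python
KG_PER_POUND = 0.45359237  # exact definition of the international avoirdupois pound

feathers_kg = 1.0               # a kilo of feathers
steel_kg = 1.0 * KG_PER_POUND   # a pound of steel, converted to kilograms

# Compare both masses in the same unit; the material is irrelevant.
heavier = "feathers" if feathers_kg > steel_kg else "steel"
print(f"{heavier} is heavier by {feathers_kg - steel_kg:.3f} kg")
# feathers is heavier by 0.546 kg
```

Once both quantities are in kilograms, the kilo of feathers is more than twice as heavy as the pound of steel.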

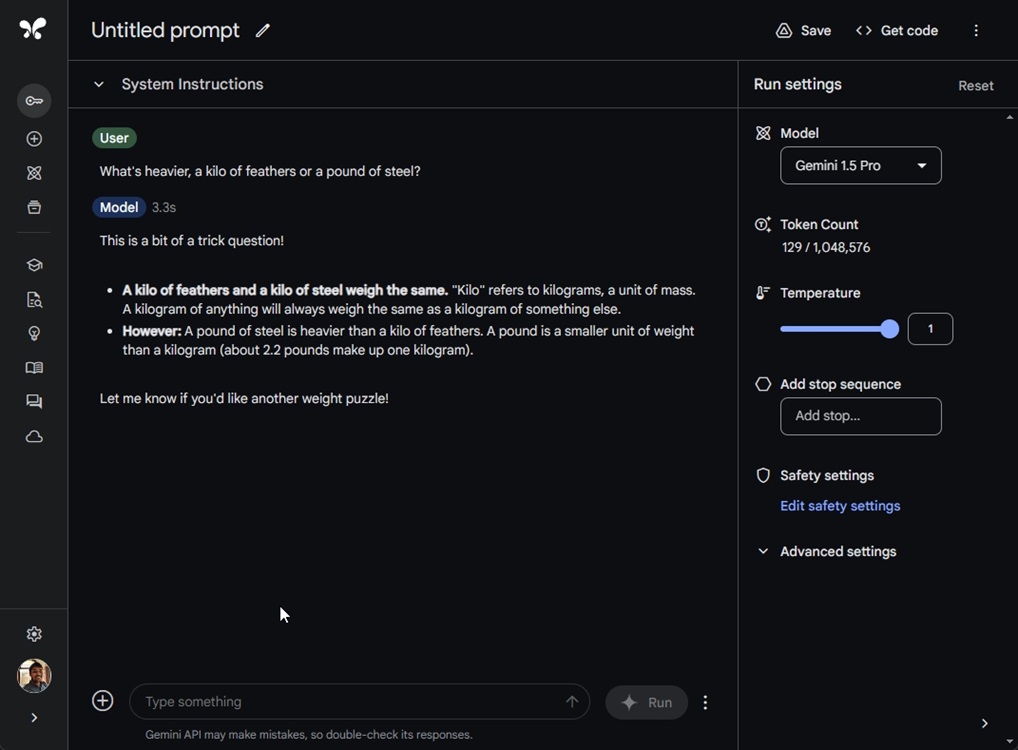

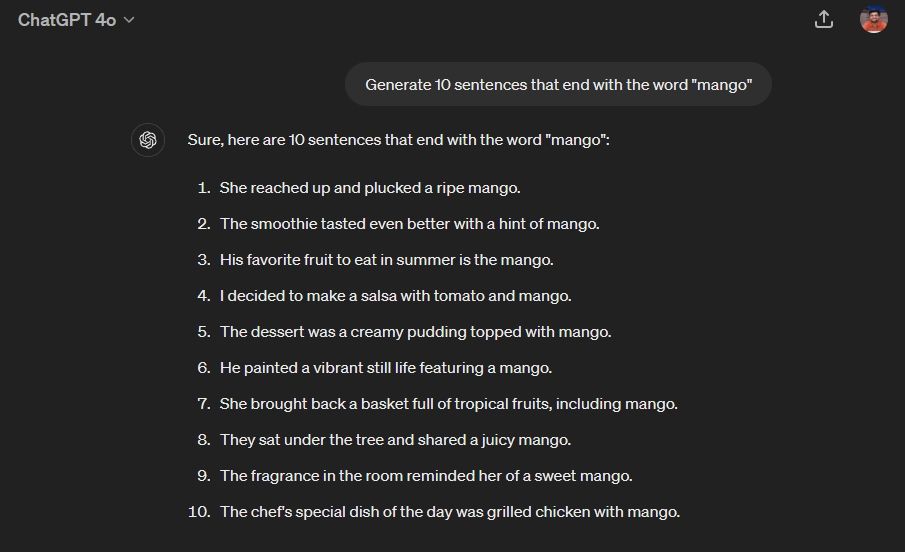

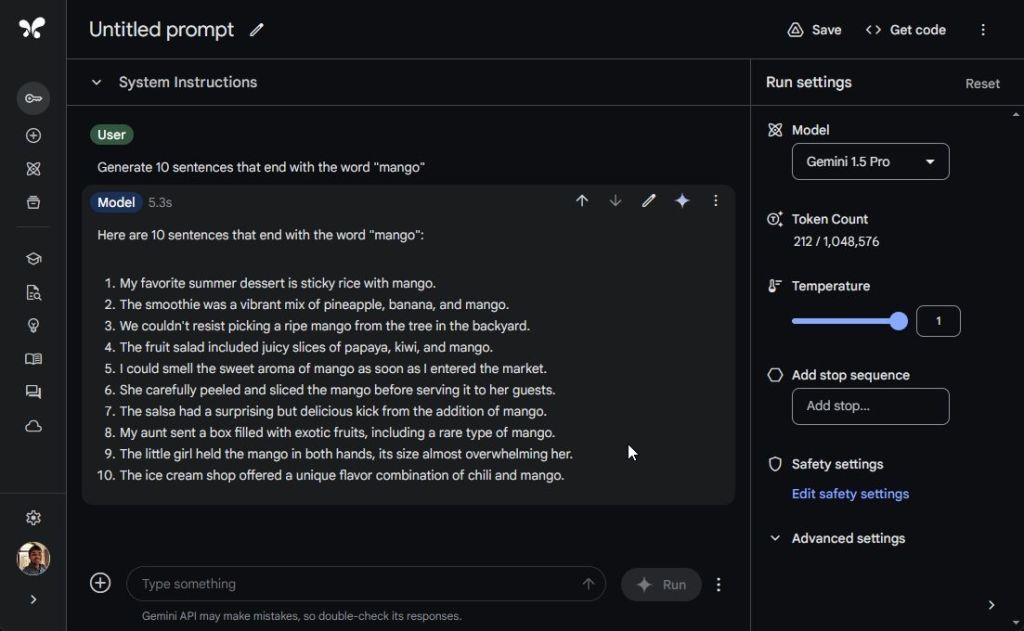

5. Follow User Instructions

I asked ChatGPT 4o and Gemini 1.5 Pro to generate 10 sentences ending with the word “mango”. Guess what? ChatGPT 4o generated all 10 sentences correctly, but Gemini 1.5 Pro could only generate 6 such sentences.

Prior to GPT-4o, only Llama 3 70B was able to properly follow user instructions. The older GPT-4 model also struggled with this test. It means OpenAI has indeed improved its model.

Generate 10 sentences that end with the word "mango"

Winner: ChatGPT 4o
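Counting by eye works for 10 sentences, but the check can also be automated. The following is a minimal Python sketch of such a scorer, assuming trailing punctuation and letter case should be ignored. The helper `ends_with_word` is hypothetical, and the sample sentences are invented for illustration; they are not the models' actual output.

```python
import string


def ends_with_word(sentence: str, word: str) -> bool:
    """True if the sentence's last word equals `word`, ignoring punctuation and case."""
    tokens = sentence.strip().split()
    if not tokens:
        return False
    last = tokens[-1].strip(string.punctuation).lower()
    return last == word.lower()


# Made-up sample sentences, not real model responses.
samples = [
    "My favorite summer fruit is the mango.",
    "She sliced a ripe mango for breakfast.",
    'He shouted, "Pass me that mango!"',
]

passed = sum(ends_with_word(s, "mango") for s in samples)
print(f"{passed}/{len(samples)} sentences end with 'mango'")
# 2/3 sentences end with 'mango'
```

The second sample mentions a mango but ends with "breakfast", which is the kind of slip a model makes when it does not follow the instruction fully.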

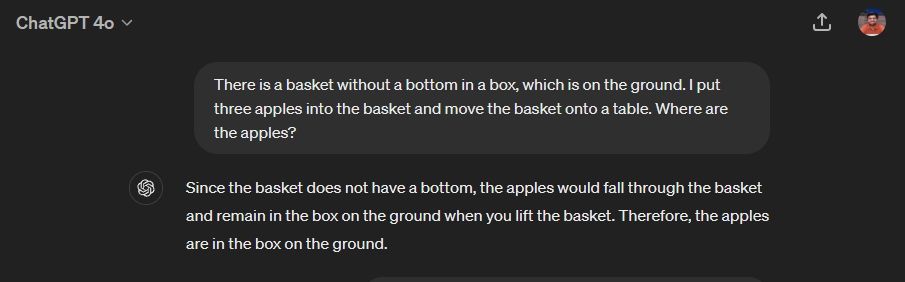

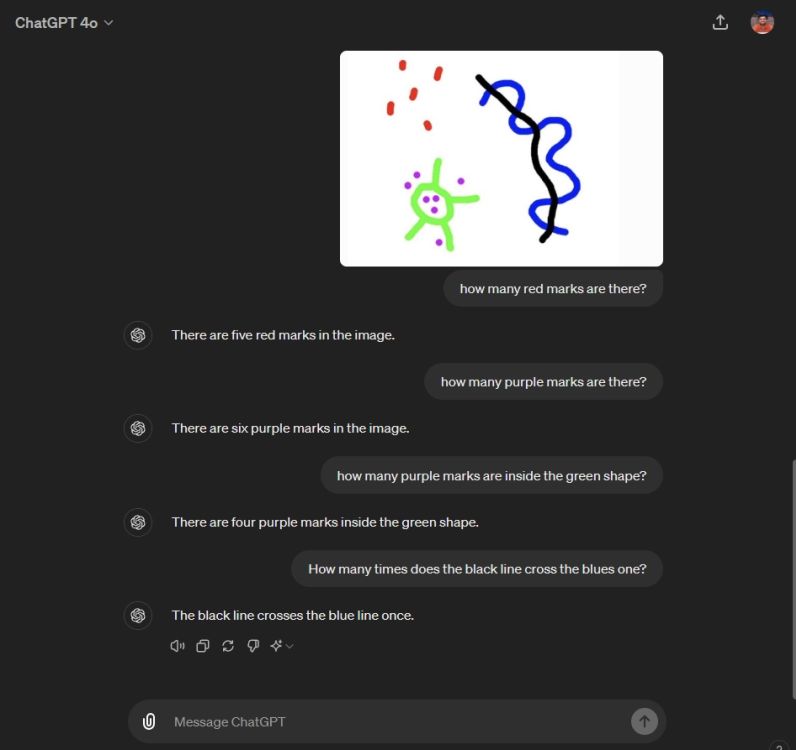

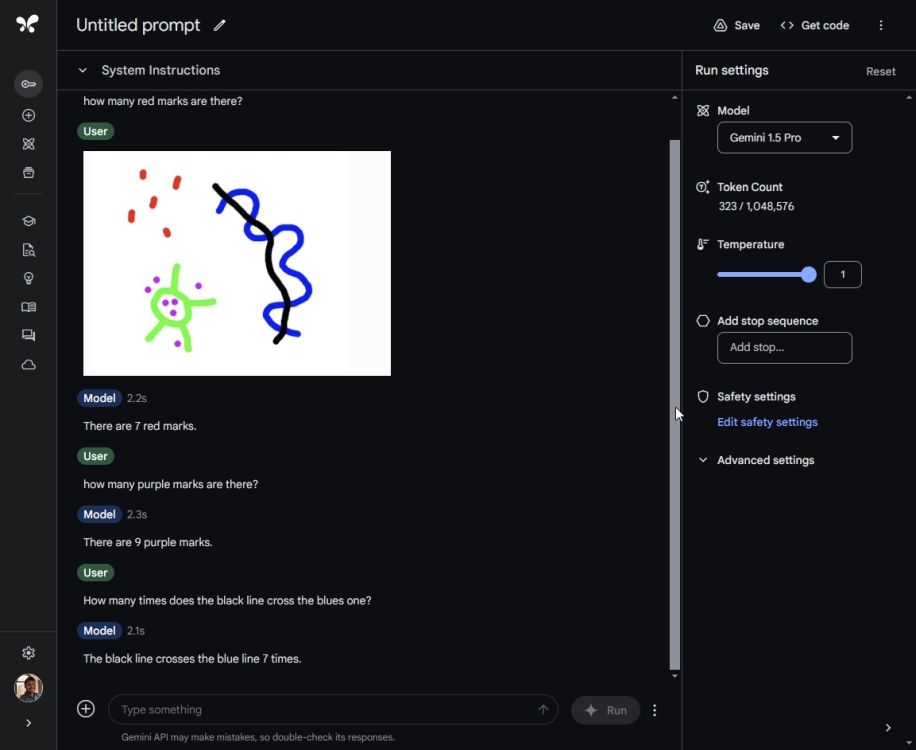

6. Multimodal Image Test

François Fleuret, author of The Little Book of Deep Learning, performed a simple image analysis test on ChatGPT 4o and shared the results on X (formerly Twitter). He has now deleted the tweet to avoid blowing the issue out of proportion since, he says, it’s a general issue with vision models.

That said, I performed the same test on Gemini 1.5 Pro and ChatGPT 4o from my end to reproduce the results. Gemini 1.5 Pro performed much worse and gave wrong answers to all the questions. ChatGPT 4o, on the other hand, gave one right answer but failed on the other questions.

It goes on to show that there are many areas where multimodal models need improvement. I’m particularly disappointed with Gemini’s multimodal capability because it seemed far off from the correct answers.

Winner: None

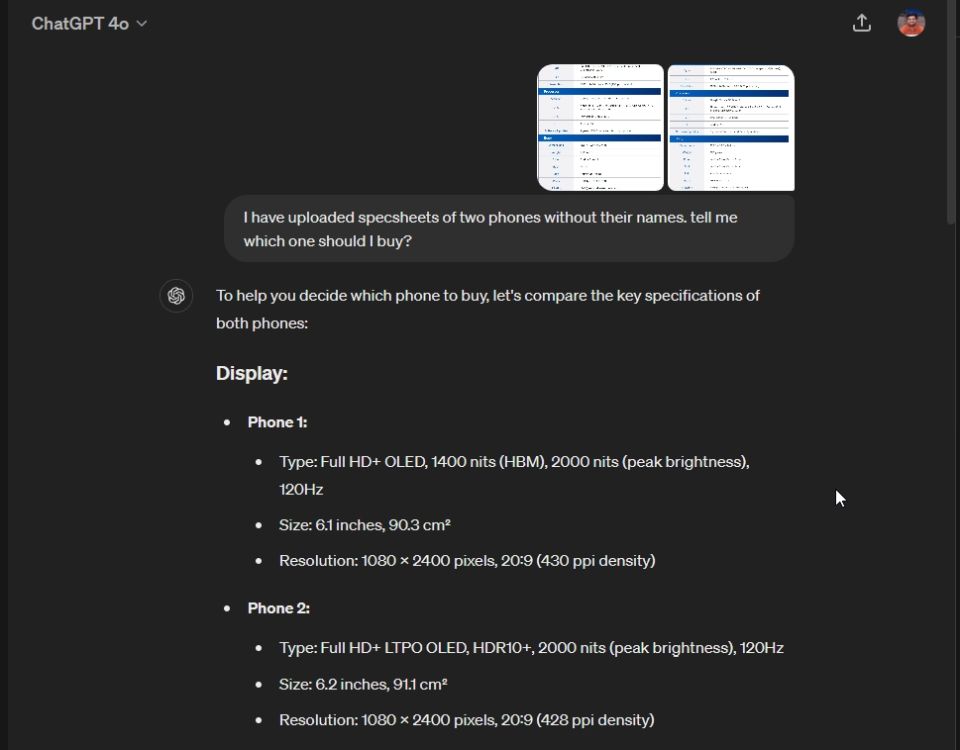

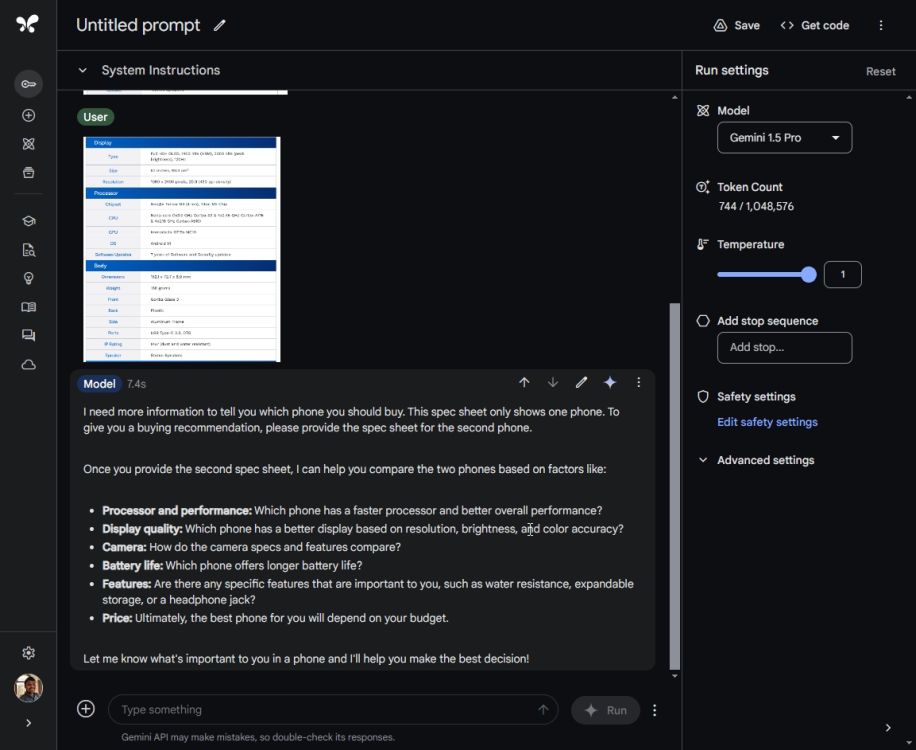

7. Character Recognition Test

In another multimodal test, I uploaded the specifications of two phones (Pixel 8a and Pixel 8) in image format. I didn’t disclose the phone names, and the screenshots didn’t contain them either. I then asked ChatGPT 4o to tell me which phone I should buy.

It successfully extracted the text from the screenshots, compared the specifications, and correctly told me to get Phone 2, which was actually the Pixel 8. Further, I asked it to guess the phone, and again, ChatGPT 4o generated the right answer: Pixel 8.

I did the same test on Gemini 1.5 Pro via Google AI Studio. By the way, Gemini Advanced doesn’t support batch upload of images yet. Coming to the results, well, it simply failed to extract the text from both screenshots and kept asking for more details. In tests like these, you find that Google is far behind OpenAI when it comes to getting things done seamlessly.

Winner: ChatGPT 4o

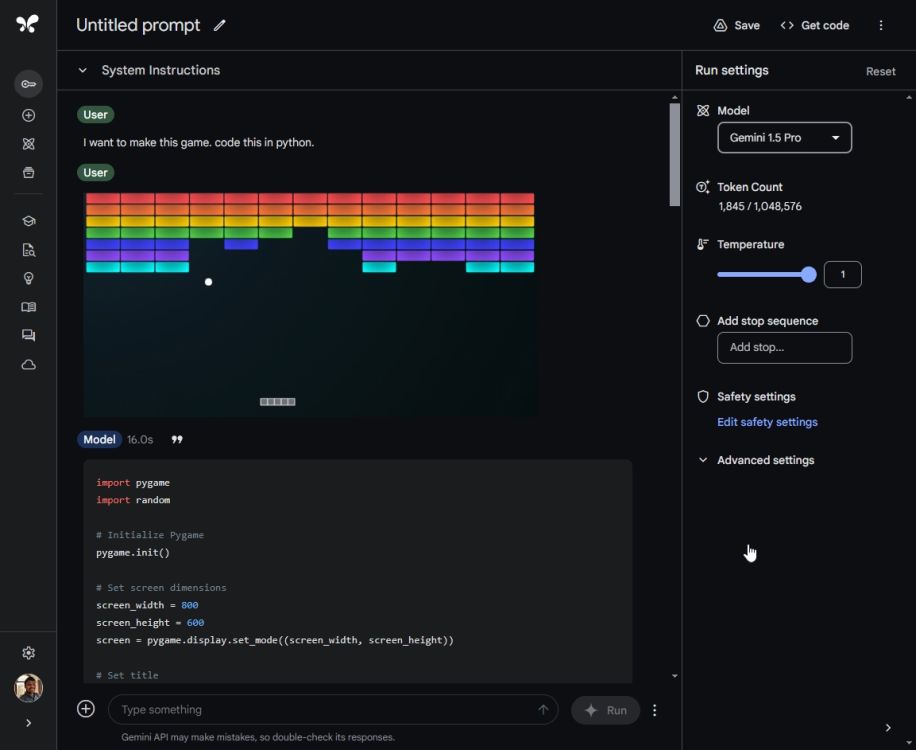

8. Create a Game

Now, to test the coding ability of ChatGPT 4o and Gemini 1.5 Pro, I asked both models to create a game. I uploaded a screenshot of the Atari Breakout game (of course, without divulging the name), and asked ChatGPT 4o to create this game in Python. In just a few seconds, it generated the entire code and asked me to install an additional “pygame” library.

I pip installed the library and ran the code with Python. The game launched successfully without any errors. Amazing! No back-and-forth debugging needed. In fact, I asked ChatGPT 4o to improve the experience by adding a Resume hotkey and it quickly added the functionality. That’s pretty cool.

Next, I uploaded the same image to Gemini 1.5 Pro and asked it to generate the code for this game. It generated the code, but upon running it, the window kept closing. I couldn’t play the game at all. Simply put, for coding tasks, ChatGPT 4o is much more reliable than Gemini 1.5 Pro.

Winner: ChatGPT 4o

The Verdict

It’s evidently clear that Gemini 1.5 Pro is far behind ChatGPT 4o. Even after Google spent months improving the 1.5 Pro model while it was in preview, it can’t compete with the latest GPT-4o model by OpenAI. From commonsense reasoning to multimodal and coding tests, ChatGPT 4o performs intelligently and follows instructions attentively. Not to forget, OpenAI has made ChatGPT 4o free for everyone.

The only thing going for Gemini 1.5 Pro is the massive context window with support for up to 1 million tokens. In addition, you can upload videos too, which is an advantage. However, since the model is not very smart, I’m not sure many would want to use it just for the larger context window.

At the Google I/O 2024 event, Google didn’t announce any new frontier model. The company is stuck with its incremental Gemini 1.5 Pro model. There is no information on Gemini 1.5 Ultra or Gemini 2.0. If Google has to compete with OpenAI, a substantial leap is required.