What Is Generative AI and Why Is It Necessary?

The age of artificial intelligence is here, and Generative AI is playing a pivotal role in bringing unprecedented advancements to everyday technology. There are already several free AI tools that can help you generate incredible images, text, music, videos, and much more within a few seconds. Adobe’s AI Generative Fill in Photoshop and Midjourney’s amazing capabilities have certainly startled us. But what exactly is Generative AI, and how is it fueling such rapid innovation? To learn more, follow our detailed explainer on Generative AI.

Definition: What’s Generative AI?

As the name suggests, Generative AI is a type of AI technology that can generate new content based on the data it has been trained on. It can generate text, images, audio, videos, and synthetic data. Generative AI can produce a wide range of outputs based on user input, or what we call “prompts”. Generative AI is basically a subfield of machine learning that can create new data from a given dataset.

If the model has been trained on large volumes of text, it can produce new combinations of natural-sounding text. The larger the dataset, the better the output tends to be. If the dataset has been cleaned prior to training, you are likely to get a more nuanced response.

Similarly, if you have trained a model on a large corpus of images with image tagging, captions, and lots of visual examples, the AI model can learn from these examples and perform image classification and generation. This sophisticated system of AI programmed to learn from examples is called a neural network.

That said, there are different kinds of Generative AI models. These include Generative Adversarial Networks (GAN), Variational Autoencoders (VAE), Generative Pretrained Transformers (GPT), Autoregressive models, and many more. We are going to briefly discuss these generative models below.

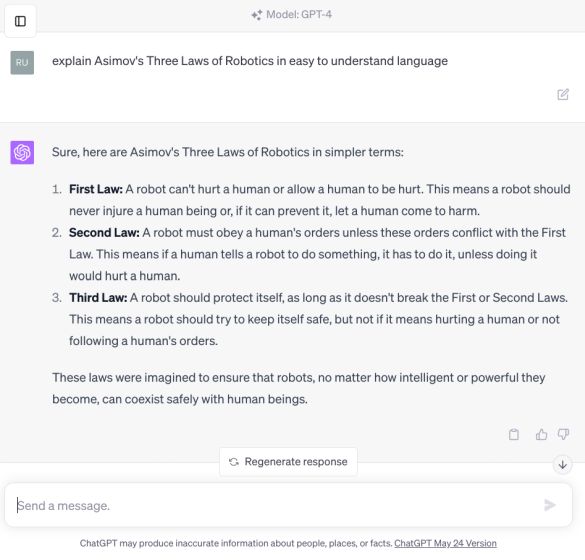

At present, GPT models have become popular after the release of GPT-4/3.5 (ChatGPT), PaLM 2 (Google Bard), GPT-3 (DALL-E), LLaMA (Meta), Stable Diffusion, and others. Most of these user-friendly AI interfaces are built on the Transformer architecture. So in this explainer, we are going to mainly focus on Generative AI and GPT (Generative Pretrained Transformer).

What Are the Different Types of Generative AI Models?

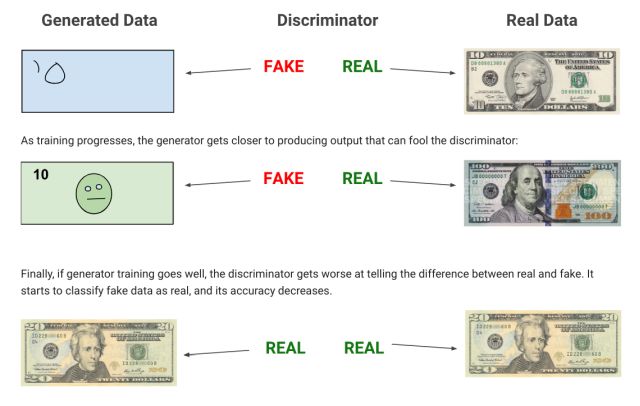

Among all the Generative AI models, GPT is favored by many, but let’s start with GAN (Generative Adversarial Network). In this architecture, two networks are trained in parallel: one generates content (called the generator) and the other evaluates the generated content (called the discriminator).

Basically, the aim is to pit two neural networks against each other to produce results that mirror real data. GAN-based models have been mostly used for image-generation tasks.
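To make the tug-of-war between the two networks a little more concrete, here is a minimal sketch of the two competing objectives. It assumes the commonly used log-loss formulation, where the discriminator outputs a probability between 0 and 1 that a sample is real. The function names and the example scores are hypothetical, and no actual networks are trained here.

```python
import math

def discriminator_loss(score_on_real, score_on_fake):
    """Low when the discriminator rates real data near 1 and generated data near 0."""
    return -(math.log(score_on_real) + math.log(1.0 - score_on_fake))

def generator_loss(score_on_fake):
    """Low when the discriminator is fooled into rating generated data near 1."""
    return -math.log(score_on_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
early = {"real": 0.9, "fake": 0.1}   # the generator is still easy to catch
later = {"real": 0.6, "fake": 0.5}   # the generator has improved

for name, scores in (("early", early), ("later", later)):
    d_loss = discriminator_loss(scores["real"], scores["fake"])
    g_loss = generator_loss(scores["fake"])
    print(name, "discriminator loss:", round(d_loss, 3), "generator loss:", round(g_loss, 3))
```

As the generator gets better at fooling the discriminator, its own loss falls while the discriminator's loss rises, which is the adversarial pressure that pushes the generated output toward real data.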

Next up, we have the Variational Autoencoder (VAE), which involves the process of encoding, learning, decoding, and generating content. For example, if you have an image of a dog, it describes the scene in terms of features like color, size, ears, and more, and then learns what kind of characteristics a dog has. After that, it recreates a rough image using key points, giving a simplified image. Finally, it generates the final image after adding more variety and nuance.

Moving to Autoregressive models, these are closely related to the Transformer model: GPT-style Transformers are themselves autoregressive, although earlier autoregressive models did not use self-attention. They are mostly used for generating text by producing a sequence and then predicting the next part based on the sequence generated so far. Next, we have Normalizing Flows and Energy-based Models as well. But finally, we are going to talk about the popular Transformer-based models in detail below.
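The idea of predicting the next part from what has been generated so far can be illustrated with a toy example. The sketch below is an assumption-laden simplification: it uses a simple word-pair (bigram) count table instead of a neural network, and the corpus, function names, and seed are all made up for illustration.

```python
import random
from collections import defaultdict

def train_bigram_model(text):
    """Record, for every word, the words that followed it in the training text."""
    followers = defaultdict(list)
    words = text.split()
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers

def generate(followers, start_word, max_words=8, seed=0):
    """Build a sequence one word at a time, each choice conditioned on the last word."""
    rng = random.Random(seed)
    sequence = [start_word]
    while len(sequence) < max_words:
        candidates = followers.get(sequence[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        sequence.append(rng.choice(candidates))
    return sequence

corpus = "the dog chased the cat and the cat chased the mouse"
model = train_bigram_model(corpus)
print(" ".join(generate(model, "the")))
```

Real autoregressive language models condition on the whole sequence generated so far rather than only the last word, but the generate-then-append loop is the same.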

What Is a Generative Pretrained Transformer (GPT) Model?

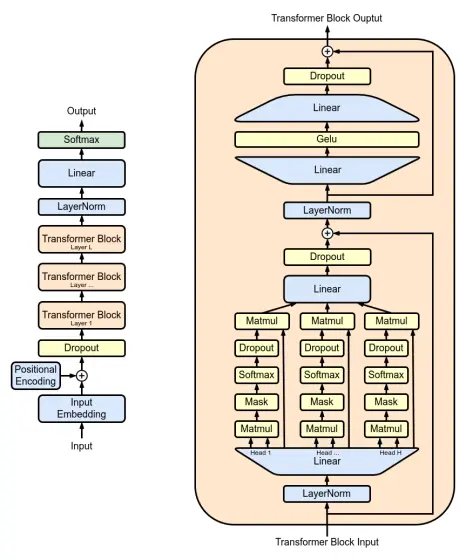

Before the Transformer architecture arrived, Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs), along with GANs and VAEs, were widely used for Generative AI. In 2017, researchers working at Google released a seminal paper, "Attention is all you need" (Vaswani, Uszkoreit, et al., 2017), to advance the field of Generative AI and make something like a large language model (LLM) possible.

Google subsequently released the BERT model (Bidirectional Encoder Representations from Transformers) in 2018, implementing the Transformer architecture. Around the same time, OpenAI released its first GPT model, GPT-1, based on the Transformer architecture.

So what was the key ingredient in the Transformer architecture that made it a favorite for Generative AI? As the paper is rightly titled, it introduced self-attention, which was missing in earlier neural network architectures. What this means is that the Transformer predicts the next word in a sentence by paying close attention to neighboring words to understand the context and establish relationships between words.
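For readers who want to see what paying attention to other words looks like numerically, here is a minimal sketch of scaled dot-product self-attention in plain Python. It makes a big simplifying assumption: each word vector acts as its own query, key, and value, whereas real Transformers apply learned projections first. The three two-dimensional word vectors are invented for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """For each word, mix all word vectors, weighted by similarity to that word."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        mixed = [sum(w * value[i] for w, value in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings: the first and third words are similar to each other.
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
outputs, weights = self_attention(embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one word attends to every word in the sentence, including itself. Words with similar vectors receive larger weights, which is how context gets blended into each word's representation.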

Through this process, the Transformer develops a reasonable understanding of the language and uses this knowledge to predict the next word reliably. This whole process is called the attention mechanism. That said, keep in mind that LLMs are derisively called Stochastic Parrots (Bender, Gebru, et al., 2021) because the model is simply mimicking random words based on probabilistic decisions and patterns it has learned. It does not determine the next word based on logic and does not have any genuine understanding of the text.

Coming to the “pretrained” term in GPT, it means that the model has already been trained on a massive amount of text data before it is adapted or applied to specific tasks. Through pre-training on this data, it learns sentence structure, patterns, facts, phrases, and so on. This allows the model to get a good understanding of how language syntax works.

How Google and OpenAI Approach Generative AI

Both Google and OpenAI are using Transformer-based models in Google Bard and ChatGPT, respectively. However, there are some key differences in approach. Google’s work in this area, starting with BERT, uses a bidirectional encoder (a self-attention mechanism and a feed-forward neural network), which means it weighs all the surrounding words. It essentially tries to understand the context of the whole sentence at once, and the model is trained to predict the missing words in a given context.

In contrast, OpenAI’s ChatGPT leverages the Transformer architecture to predict the next word in a sequence, from left to right. It is a unidirectional model designed to generate coherent sentences. It continues the prediction until it has generated a complete sentence or paragraph. Perhaps that’s the reason Google Bard is able to generate text much faster than ChatGPT. Nevertheless, both models rely on the Transformer architecture at their core to offer Generative AI frontends.
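The difference between weighing all surrounding words and reading strictly left to right is often implemented as an attention mask. The sketch below is illustrative only: the `attention_mask` helper is a hypothetical name, and it shows the general masking idea rather than the internals of any particular Google or OpenAI model.

```python
def attention_mask(length, causal):
    """Return a grid where mask[i][j] is True if word i may look at word j."""
    return [[(j <= i) if causal else True for j in range(length)]
            for i in range(length)]

def show(mask):
    """Print the mask with 1 for 'visible' and 0 for 'hidden'."""
    for row in mask:
        print(" ".join("1" if visible else "0" for visible in row))

print("Bidirectional (every word sees every word):")
show(attention_mask(4, causal=False))

print("Unidirectional (each word sees only itself and earlier words):")
show(attention_mask(4, causal=True))
```

In the unidirectional case the grid is lower-triangular, so a word can never peek at the words that come after it, which is what makes next-word prediction a meaningful training task.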

Applications of Generative AI

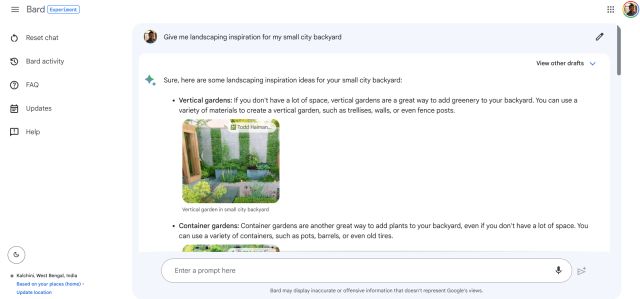

We all know that Generative AI has huge applications not just for text, but also for images, videos, audio generation, and much more. AI chatbots like ChatGPT, Google Bard, Bing Chat, etc. leverage Generative AI. It can also be used for autocomplete, text summarization, virtual assistants, translation, etc. To generate music, we have seen examples like Google MusicLM, and recently Meta released MusicGen for music generation.

Apart from that, from DALL-E 2 to Stable Diffusion, all use Generative AI to create realistic images from text descriptions. In video and image synthesis too, models such as Runway’s Gen-1, StyleGAN 2, and BigGAN generate lifelike visuals, with StyleGAN 2 and BigGAN relying on Generative Adversarial Networks. Further, Generative AI has applications in 3D model generation, and some of the popular resources in this area are DeepFashion and ShapeNet.

Not just that, Generative AI can be of huge help in drug discovery too. It can help design novel drugs for a specific disease. We have already seen models relevant to drug discovery like AlphaFold, developed by Google DeepMind, which predicts protein structures. Finally, Generative AI can be used for predictive modeling to forecast future events in finance and weather.

Limitations of Generative AI

While Generative AI has immense capabilities, it is not without its failings. First off, it requires a large corpus of data to train a model. For many small startups, high-quality data might not be readily available. We have already seen companies such as Reddit, Stack Overflow, and Twitter closing access to their data or charging high fees for access. Recently, the Internet Archive reported that its website had become inaccessible for an hour because some AI startup started hammering its website for training data.

Apart from that, Generative AI models have also been heavily criticized for lack of control and bias. AI models trained on skewed data from the internet can overrepresent one section of the community. We have seen how AI image generators mostly render images in lighter skin tones. Then, there is the huge issue of deepfake video and image generation using Generative AI models. As stated earlier, Generative AI models do not understand the meaning or impact of their words and usually mimic output based on the data they have been trained on.

It’s highly likely that, despite best efforts at alignment, companies will have a hard time taming Generative AI’s limitations, given misinformation, deepfake generation, jailbreaking, and sophisticated phishing attempts that exploit its persuasive natural language capability.