In Today’s AI Race, Don’t Gamble with Your Digital Privacy

There is no doubt that we live in the AI age, with chatbots and single-use AI hardware being launched left and right. In the coming years, AI is only going to reach into every aspect of our lives. AI companies are relentlessly collecting data, both public and personal, to train and improve their models. In the process, however, we are giving away our personal information, which can put our privacy at risk. So, I looked into the privacy policies of popular AI chatbots and services and have recommended the best ways you, as a user, can protect your privacy.

How Popular AI Chatbots Handle Your Data

Google Gemini (Formerly Bard)

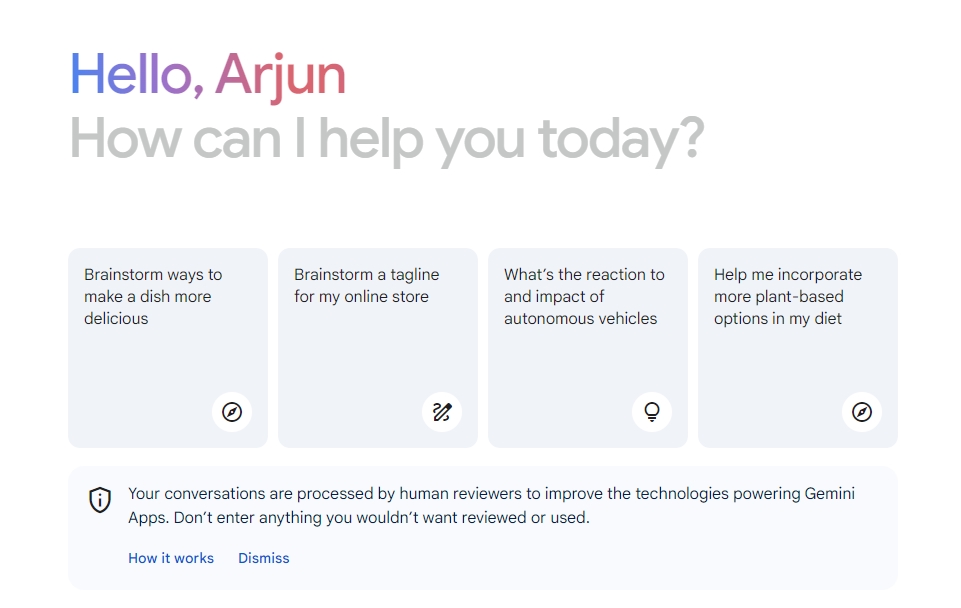

To begin with, Google’s Gemini stores all your activity data by default. It doesn’t seek the user’s explicit consent before storing the data. Google says all your interactions and activities on Gemini are stored for up to 18 months. In addition, your Gemini chats are processed by human reviewers who read and annotate the conversations to improve Google’s AI model. The Gemini Apps Privacy Hub page reads:

To help with quality and improve our products (such as generative machine-learning models that power Gemini Apps), human reviewers read, annotate, and process your Gemini Apps conversations.

Google further asks users not to share anything confidential or personal that they don’t want reviewers to see or Google to use. On the Gemini homepage, a dialog informs the user about this. Apart from conversations, your location details, IP address, device type, and home/work address from your Google account are also stored as part of Gemini Apps Activity.

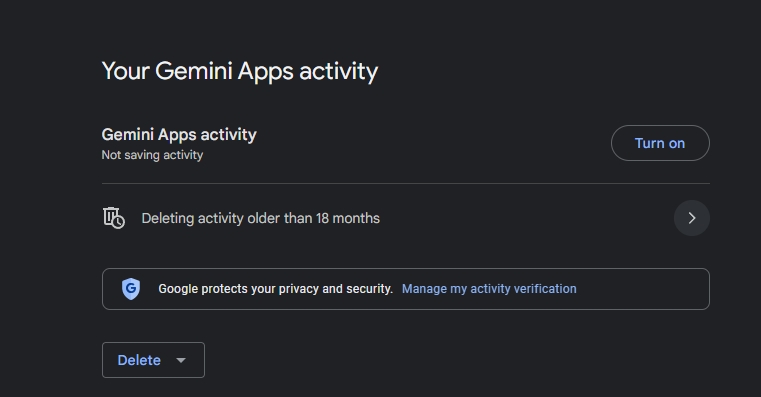

Data Retention Policy

That said, Google says your data is anonymized by disassociating your Google account from your conversations to protect your privacy. Google also offers the option to turn off Gemini Apps Activity and lets you delete all your Gemini-related data. However, things get a bit murky here.

Once your conversations have been evaluated or annotated by human reviewers, they are not deleted even if you delete all your past Gemini data. Google retains that data for up to three years. The page reads:

Conversations that have been reviewed or annotated by human reviewers (and related data like your language, device type, location info, or feedback) are not deleted when you delete your Gemini Apps activity because they are kept separately and are not connected to your Google Account. Instead, they are retained for up to three years.

In addition, even when Gemini Apps Activity is turned off, Google stores your conversations for 72 hours (three days) to “provide the service and process any feedback”.
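To make the interplay of these rules concrete, here is a minimal Python sketch that computes the latest date any copy of a Gemini conversation could still exist. It hard-codes the windows quoted above (18 months with activity on, 72 hours with it off, up to three years for human-reviewed conversations) and, as hypothetical assumptions, treats the three-year clock as starting when the conversation is created and deletion as removing only the account-linked copy. Google does not spell out either detail, and the function names are illustrative, not any Google API.

```python
import calendar
from datetime import datetime, timedelta
from typing import Optional

# Retention windows as described on Google's Gemini Apps Privacy Hub
# at the time of writing; Google may change them.
ACTIVITY_ON_MONTHS = 18              # Gemini Apps Activity on (the default)
ACTIVITY_OFF = timedelta(hours=72)   # Activity off: kept for 72 hours
REVIEWED_MONTHS = 36                 # Human-reviewed copies: up to three years


def add_months(moment: datetime, months: int) -> datetime:
    """Shift a datetime by whole calendar months, clamping the day if needed."""
    years, month_index = divmod(moment.month - 1 + months, 12)
    year = moment.year + years
    month = month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return moment.replace(year=year, month=month, day=min(moment.day, last_day))


def latest_copy_date(created: datetime, activity_on: bool, reviewed: bool,
                     deleted_at: Optional[datetime] = None) -> datetime:
    """Return the latest date any copy of a conversation may still exist.

    Hypothetical assumptions: the three-year clock for reviewed copies starts
    at creation, and deleting activity only removes the account-linked copy.
    """
    if activity_on:
        account_copy_end = add_months(created, ACTIVITY_ON_MONTHS)
        if deleted_at is not None:
            account_copy_end = min(account_copy_end, deleted_at)
    else:
        account_copy_end = created + ACTIVITY_OFF

    if reviewed:
        # Reviewed conversations are stored separately and survive deletion.
        return max(account_copy_end, add_months(created, REVIEWED_MONTHS))
    return account_copy_end


# A chat from 1 April 2024 that you delete the next day:
chat = datetime(2024, 4, 1, 12, 0)
print(latest_copy_date(chat, activity_on=True, reviewed=False,
                       deleted_at=datetime(2024, 4, 2)))  # 2024-04-02 00:00:00
print(latest_copy_date(chat, activity_on=True, reviewed=True,
                       deleted_at=datetime(2024, 4, 2)))  # 2027-04-01 12:00:00
```

Deleting the chat cuts the account-linked copy short, but once a reviewer has touched the conversation, the separate copy can outlive your deletion by roughly three years.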

As for uploaded images, Google says the textual information interpreted from an image is stored, not the image itself. However, it goes on to say, “Currently [emphasis added], we don’t use the actual images you upload or their pixels to improve our machine-learning technologies”.

In the future, Google might use uploaded images to improve its model, so you should be cautious and refrain from uploading personal photos to Gemini.

If you have enabled the Google Workspace extension in Gemini, your personal data accessed from apps like Gmail, Google Drive, and Docs does not go through human reviewers, and Google does not use it to train its AI model. However, the data is stored for the “time period needed to provide and maintain Gemini Apps services”.

If you use other extensions such as Google Flights, Google Hotels, Google Maps, and YouTube, the associated conversations are reviewed by humans, so keep that in mind.

OpenAI ChatGPT

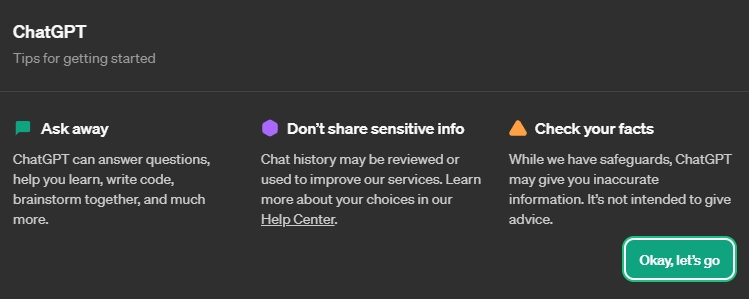

OpenAI’s ChatGPT is by far the most popular AI chatbot. Like Gemini, ChatGPT saves all your conversations by default. But unlike Gemini, it tells users not to share sensitive information only once, when a new user first signs up.

There is no static banner on the homepage informing users that their data may be used for reviewing conversations or to train the model.

As for the kind of personal data ChatGPT collects, it stores your conversations, images, files, and content from DALL-E for model training and performance improvements. Besides that, OpenAI also collects IP addresses, usage data, device information, geolocation data, and more. This applies to both free ChatGPT users and paid ChatGPT Plus users.

OpenAI says content from business plans like ChatGPT Team, ChatGPT Enterprise, and the API Platform is not used to train and improve its models.

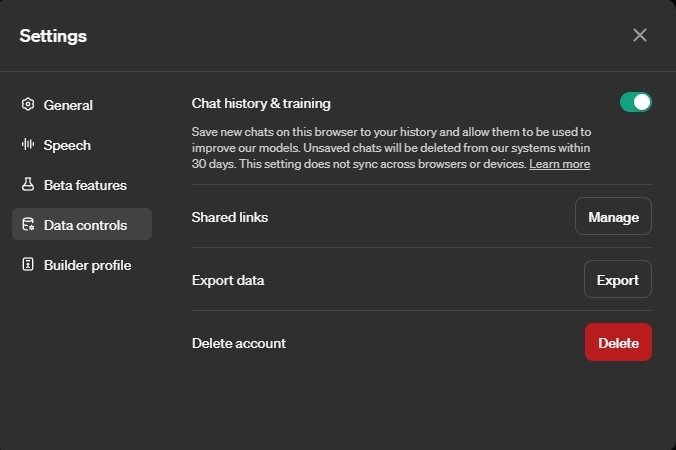

OpenAI does let you disable chat history and training in ChatGPT from Settings -> Data controls. However, this setting doesn’t sync across the other browsers and devices where you use ChatGPT with the same account. So, to disable history and training, you need to open the settings and turn it off on every device where you use ChatGPT.

Once you have disabled chat history, new chats won’t appear in the sidebar and won’t be used for model training. However, OpenAI will retain those chats for 30 days to monitor for abuse, and during that period they won’t be used for model training either.

As for whether OpenAI uses human reviewers to view conversations, OpenAI says:

“A limited number of authorized OpenAI personnel, as well as trusted service providers that are subject to confidentiality and security obligations, may access user content only as needed for these reasons: (1) investigating abuse or a security incident; (2) to provide support to you if you reach out to us with questions about your account; (3) to handle legal matters; or (4) to improve model performance (unless you have opted out). Access to content is subject to technical access controls and limited only to authorized personnel on a need-to-know basis. Additionally, we monitor and log all access to user content and authorized personnel must undergo security and privacy training prior to accessing any user content.”

So yes, just like Google, OpenAI employs human reviewers to view conversations and train and improve its models by default. OpenAI doesn’t disclose this on ChatGPT’s homepage, which seems like a lack of transparency on OpenAI’s part.

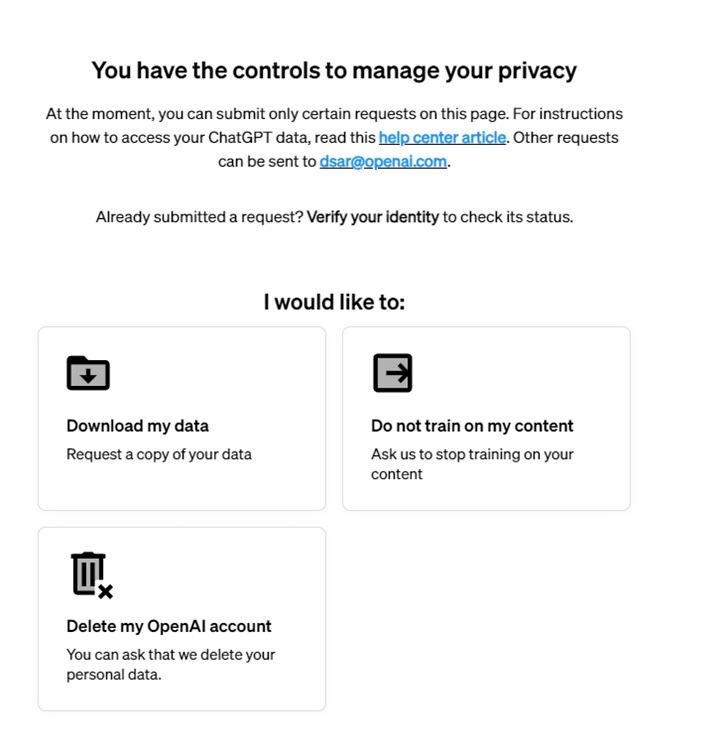

You do have the option to opt out and ask OpenAI to stop training on your content while keeping the Chat history feature intact. However, OpenAI doesn’t link to this privacy portal from the Settings page. It’s buried deep in OpenAI’s documentation, where average users won’t easily find it. At least on transparency, Google does a better job than OpenAI.

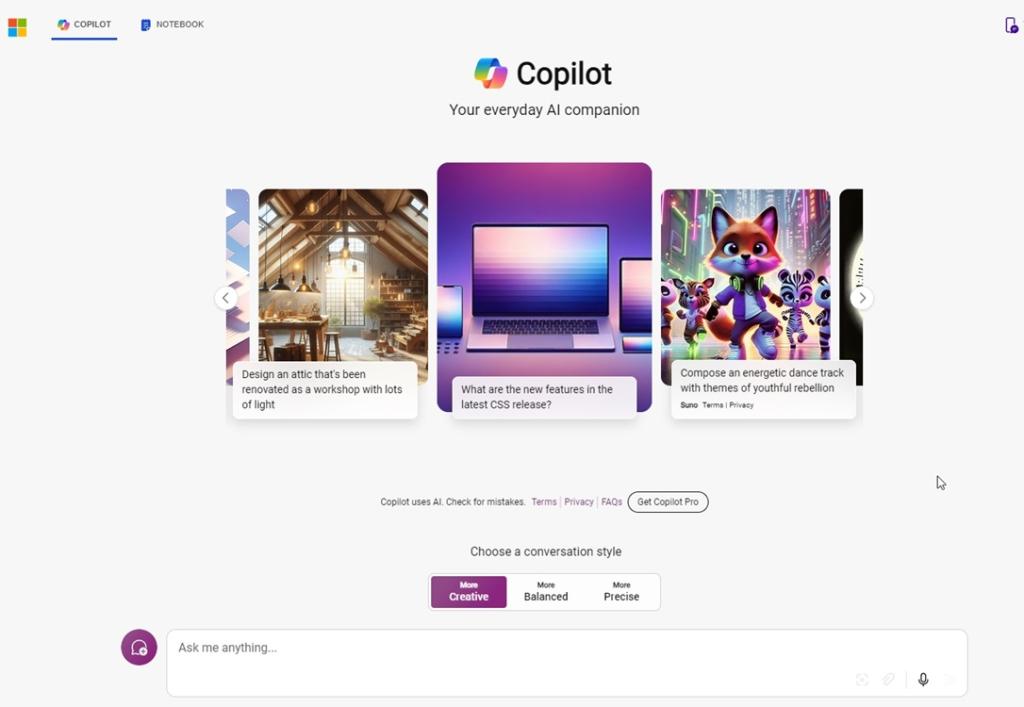

Microsoft Copilot

Of all the services, I found Microsoft Copilot’s privacy policy to be the most convoluted. It doesn’t lay out the specifics of what personal data is collected and how Microsoft handles that data.

The Microsoft Copilot FAQ page says you can disable personalization, aka chat history. However, there is no such setting on the Copilot page. There is an option to clear all your Copilot activity history from the Microsoft account page, but that’s all.

The one advantage of Copilot is that it doesn’t personalize your interaction if it deems the prompt sensitive. It also doesn’t save the conversation if the information appears to be private.

If you are a Copilot Pro user, Microsoft uses data from Office apps to deliver new AI experiences. To disable this, turn off Connected Experiences from any one of the Office apps: head over to Account -> Manage Settings under Account Privacy and turn off Connected Experiences.

Remini, Runway, and More

Remini is one of the most popular AI photo enhancers out there, with millions of users. However, its privacy policy is pretty dicey, and users should be aware of it before uploading their personal photos to such apps.

Its data retention policy says that processed personal data is kept by the company for 2 to 10 years, which is quite long. While images, videos, and audio recordings are deleted from its servers after 15 days, processed facial data, which is sensitive by nature, is kept for many years. In addition, all your data can be handed over to third-party vendors or companies in the event of a merger or acquisition.

Similarly, Runway, a popular AI tool for images and videos, retains data for up to three years. Lensa, a popular AI photo editor, doesn’t delete your data until you delete your Lensa account, and to delete your account you have to email the company.

Many such AI tools and services store personal data, particularly processed data from images and videos, for years. If you want to avoid them, look for AI image tools that run locally. Apps like SuperImage and Upscayl let you enhance photos locally.

Data Sharing with Third Parties

As far as data sharing with third parties is concerned, Google doesn’t mention whether the human reviewers who process conversations are part of Google’s in-house team or third-party vendors. Generally, the industry norm is to outsource this kind of work to third-party vendors.

OpenAI, on the other hand, says, “We share content with a select group of trusted service providers that help us provide our services. We share the minimum amount of content we need in order to accomplish this purpose and our service providers are subject to strict confidentiality and security obligations.”

OpenAI explicitly mentions that its in-house reviewers, along with trusted third-party service providers, view and process content, although the data is de-identified. In addition, the company doesn’t sell data to third parties, and conversations are not used for marketing purposes.

On this front, Google also says that conversations are not used to show ads, and that if this changes in the future, it will clearly communicate the change to users.

Risks of Personal Data in Training Datasets

There are numerous risks when personal data makes its way into a training dataset. First of all, it violates the privacy of individuals who may never have expressly consented to models being trained on their personal information. This can be particularly invasive if the service provider doesn’t communicate its privacy policy to users transparently.

Apart from that, the most common risk is a breach of confidential data. Last year, Samsung banned its employees from using ChatGPT after staff shared sensitive company data with the chatbot. Even though the data is anonymized, various prompting techniques can coax an AI model into revealing sensitive information.

Lastly, data poisoning is also a real risk. Researchers say attackers could inject malicious data into conversations that skews the model’s output. Poisoned data can also introduce harmful biases and compromise the security of AI models. Andrej Karpathy, a founding member of OpenAI, has explained data poisoning in extensive detail.

Is There Any Opt-out Mechanism?

While major service providers like Google and OpenAI give users a way to opt out of model training, doing so also disables chat history. It seems like companies are punishing users for choosing privacy over functionality.

Companies could just as well keep chat history available, so users can find important past conversations, while leaving those conversations out of the training dataset.

OpenAI does, in fact, let users opt out of model training while keeping chat history, but it doesn’t promote the feature prominently, and it’s nowhere to be found on ChatGPT’s settings page. You have to head to its privacy portal and ask OpenAI to stop training on your content while keeping your chat history intact.

Google doesn’t offer any such option, which is disappointing. Privacy shouldn’t come at the cost of losing useful functionality.

What Are the Alternatives?

As for alternatives and ways to minimize your data footprint, you can, first of all, disable chat history. On ChatGPT, you can keep chat history and still opt out of model training via its privacy portal page.

Beyond that, if you are serious about your privacy, you can run LLMs (large language models) locally on your computer. Many open-source models run on Windows, macOS, and Linux, even on mid-range computers. We have a dedicated in-depth guide on how to run an LLM locally on your computer.

You can also run Google’s tiny Gemma model locally on your computer. And if you want to ingest your own private documents, you can check out PrivateGPT, which also runs on your computer.
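As one illustration of what “local” means in practice, the sketch below sends a prompt to a model served on your own machine, so the prompt never leaves localhost. It assumes Ollama (a popular local runner that the article itself does not mention) is installed, listening on its default port 11434, and has already pulled a Gemma model under the tag `gemma:2b`; swap in whichever runner and model you actually use. Only the Python standard library is needed.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port. This endpoint
# belongs to Ollama, not to Google or to the Gemma model itself.
OLLAMA_URL = "http://localhost:11434/api/generate"
DEFAULT_MODEL = "gemma:2b"  # assumed tag; use whichever model you have pulled


def build_request(prompt: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build a single, non-streaming generate request for the local server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = DEFAULT_MODEL) -> str:
    """Send the prompt to localhost and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]  # the non-streaming reply carries the text here


# Example (requires the local server to be running):
# print(ask_local_model("Summarize my notes without sending them anywhere."))
```

Setting `"stream": False` asks the server for one JSON object instead of a stream of partial chunks, which keeps the parsing simple.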

Overall, in today’s AI race, where companies are looking to scrape data from every corner of the internet and even generate synthetic data, it’s up to us to safeguard our personal data. I’d strongly recommend that users not feed or upload personal data to AI services, to preserve their privacy. And AI companies shouldn’t take away valuable functionality from users who choose privacy. Both can co-exist.
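If you do use a cloud chatbot, a crude first line of defense is to scrub obvious identifiers from a prompt before sending it. The following Python sketch replaces e-mail addresses and phone-like numbers with placeholders. The patterns are hypothetical and deliberately simple: they will miss many kinds of personal data and over-redact others (a date such as 2024-04-01 also matches the phone pattern), so treat this as an illustration of the idea, not a substitute for leaving personal data out altogether.

```python
import re

# Hypothetical, deliberately naive patterns: they miss plenty of personal
# data and can over-redact (e.g. dates like 2024-04-01 look like phone numbers).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(prompt: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


print(redact("Mail jane.doe@example.com or call +1 (555) 123-4567 today"))
# Mail [EMAIL] or call [PHONE] today
```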